Reading Between The Threads: Shader Intrinsics

Introduction

When writing compute shaders, it’s often necessary to communicate values between threads. This is typically done via shared memory. Kepler GPUs introduced “shuffle” intrinsics, which allow threads of a warp to directly read each other’s registers avoiding memory access and synchronization. Shared memory is relatively fast but instructions that operate without using memory of any kind are significantly faster still.

This article discusses those “warp shuffle” and “warp vote” intrinsics and how you can take advantage of them in your DirectX, OpenGL, and Vulkan applications in addition to CUDA. We also provide the ShuffleIntrinsicsVk sample which illustrates basic use cases of those intrinsics.

The intrinsics can be further divided into the following categories:

- warp vote – cross warp predicates

- ballot, all, any

- warp shuffle – cross warp data exchange

- Indexed (any-to-any) shuffle, shuffle up, shuffle down, butterfly (xor) shuffle

- fragment quad swizzle – fragment quad data exchange and arithmetic

- quadshuffle

The vote and shuffle intrinsics (not fragment quad swizzle) are available not just in compute shaders but to all graphics shaders!

How Does Shuffle Help?

There are three main advantages to using warp shuffle and warp vote intrinsics instead of shared memory:

- Shuffle/vote replaces a multi-instruction shared memory sequence with a single instruction that avoids using memory, increasing effective bandwidth and decreasing latency.

- Shuffle/vote does not use any shared memory which is not available in graphics shaders

- Synchronization is within a warp and is implicit in the instruction, so there is no need to synchronize the whole thread block with GroupMemoryBarrierWithGroupSync / groupMemoryBarrier.

Where Can Shuffle Help?

During the port of the EGO® engine to next-gen console, we discovered that warp/wave-level operations enabled substantial optimisations to our light culling system. We were excited to learn that NVIDIA offered production-quality, ready-to-use HLSL extensions to access the same functionality on GeForce GPUs. We were able to exploit the same warp vote and lane access functionality as we had done on console, yielding wins of up to 1ms at 1080p on a GTX 980. We continue to find new optimisations to exploit these intrinsics.

Tom Hammersley, Principal Graphics Programmer, Codemasters Birmingham

There are quite a few algorithms (or building blocks) that use shared memory and could benefit from using shuffle intrinsics:

- Reductions

- Computing min/max/sum across a range of data, like render targets for bloom, depth-of-field, or motion blur.

- Partial reductions can be done per warp using shuffle and then combined with the results from the other warps. This still might involve shared or global memory but at a reduced rate.

- List building

- Light culling, for example might involve shared memory atomics. Their usage can be reduced when computing “slots” per warp or skipped completely if all threads in a warp vote that they don’t need to add any light to the list.

- Sorting

- Shuffle can also be used to implement a faster bitonic sort, especially for data that fits within a warp.

As always, it’s advisable to profile and measure as you are optimizing your shaders!

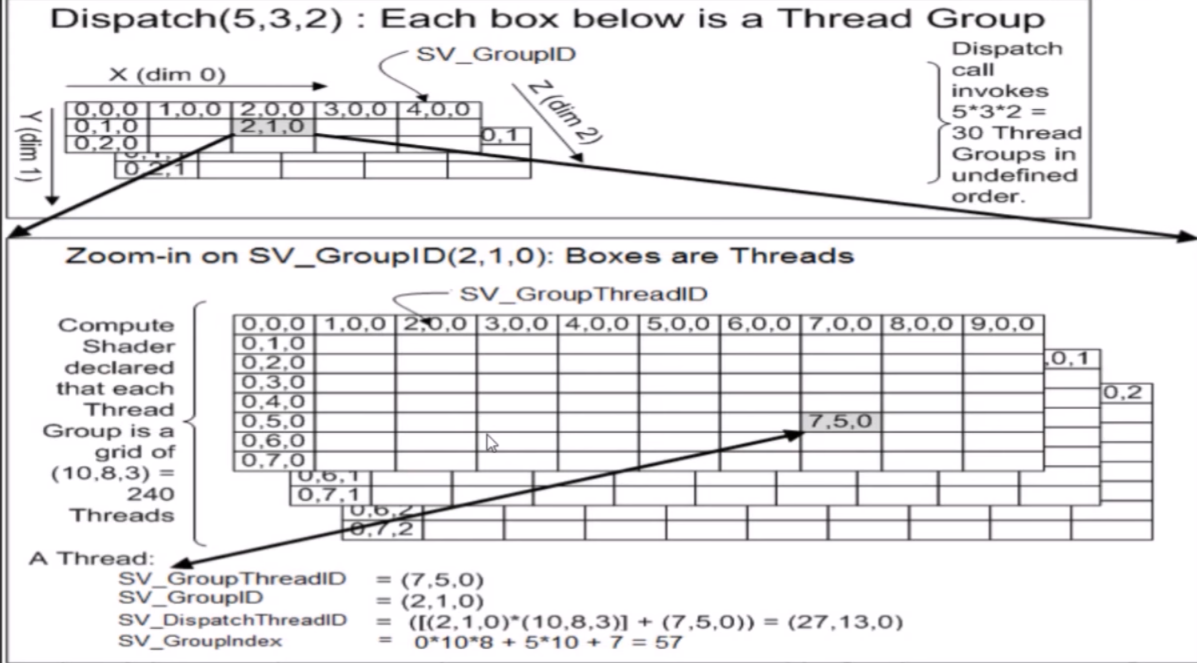

Threads, Warps and SMs

NVIDIA GPUS, such as those from our Pascal generation, are composed of different configurations of Graphics Processing Clusters (GPCs), Streaming Multiprocessors (SMs), and memory controllers. Threads from compute and graphics shaders are organized in groups called warps that execute in lock-step.

On current hardware, a warp has a width of 32 threads. In case of future changes, it’s a good idea to use the following intrinsics to determine the number of threads within a warp as well as the thread index (or lane) within the current warp:

Emulates Shuriken Particle System Unity Interface