using System;

using System.Linq;

using System.Collections.Generic;

using UnityEngine;

namespace VoiceChat

{

[RequireComponent(typeof(AudioSource))]

public class VoiceChatPlayer : MonoBehaviour

{

public event Action PlayerStarted;

float lastTime = 0;

double played = 0;

double received = 0;

int index = 0;

float[] data;

float playDelay = 0;

bool shouldPlay = false;

float lastRecvTime = 0;

NSpeex.SpeexDecoder speexDec = new NSpeex.SpeexDecoder(NSpeex.BandMode.Narrow);

[SerializeField]

[Range(.1f, 5f)]

float playbackDelay = .5f;

[SerializeField]

[Range(1, 32)]

int packetBufferSize = 10;

SortedList<ulong, VoiceChatPacket> packetsToPlay = new SortedList<ulong, VoiceChatPacket>();

public float LastRecvTime

{

get { return lastRecvTime; }

}

void Start()

{

int size = VoiceChatSettings.Instance.Frequency * 10;

GetComponent<AudioSource>().loop = true;

GetComponent<AudioSource>().clip = AudioClip.Create("VoiceChat", size, 1, VoiceChatSettings.Instance.Frequency, false);

data = new float[size];

if (VoiceChatSettings.Instance.LocalDebug)

{

VoiceChatRecorder.Instance.NewSample += OnNewSample;

}

if(PlayerStarted != null)

{

PlayerStarted();

}

}

void Update()

{

if (GetComponent<AudioSource>().isPlaying)

{

// Wrapped around

if (lastTime > GetComponent<AudioSource>().time)

{

played += GetComponent<AudioSource>().clip.length;

}

lastTime = GetComponent<AudioSource>().time;

// Check if we've played too far (past the end of the audio received so far)

if (played + GetComponent<AudioSource>().time >= received)

{

Stop();

shouldPlay = false;

}

}

else

{

if (shouldPlay)

{

playDelay -= Time.deltaTime;

if (playDelay <= 0)

{

GetComponent<AudioSource>().Play();

}

}

}

}

void Stop()

{

GetComponent<AudioSource>().Stop();

GetComponent<AudioSource>().time = 0;

index = 0;

played = 0;

received = 0;

lastTime = 0;

}

public void OnNewSample(VoiceChatPacket newPacket)

{

// Set last time we got something

lastRecvTime = Time.time;

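// Ignore duplicate packets: SortedList.Add throws if the key already exists

if (packetsToPlay.ContainsKey(newPacket.PacketId))

{

return;

}

packetsToPlay.Add(newPacket.PacketId, newPacket);

// Wait until the jitter buffer holds packetBufferSize packets

if (packetsToPlay.Count < packetBufferSize)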

{

return;

}

var pair = packetsToPlay.First();

var packet = pair.Value;

packetsToPlay.Remove(pair.Key);

// Decompress

float[] sample = null;

int length = VoiceChatUtils.Decompress(speexDec, packet, out sample);

// Add more time to received

received += VoiceChatSettings.Instance.SampleTime;

// Push data to buffer

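// Split the copy in two if the packet runs past the end of the buffer

int firstPart = Math.Min(length, data.Length - index);

Array.Copy(sample, 0, data, index, firstPart);

Array.Copy(sample, firstPart, data, 0, length - firstPart);

// Increase index, wrapping around the end of the clip

index = (index + length) % data.Length;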

// Set data

GetComponent<AudioSource>().clip.SetData(data, 0);

// If we're not playing

if (!GetComponent<AudioSource>().isPlaying)

{

// Set that we should be playing

shouldPlay = true;

// And if we have no delay set, set it.

if (playDelay <= 0)

{

playDelay = (float)VoiceChatSettings.Instance.SampleTime * playbackDelay;

}

}

VoiceChatFloatPool.Instance.Return(sample);

}

}

}
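A Unity-free sketch of the two buffering steps in `VoiceChatPlayer.OnNewSample`: packets are held in a `SortedList` keyed by id and the oldest is released only once enough have queued up (`packetBufferSize`), then the decoded samples are copied into a circular buffer that wraps at the end. `JitterBuffer` and `RingWriter` are hypothetical names; packets are plain `float[]` here instead of `VoiceChatPacket`, and Speex decoding is left out.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical jitter buffer: packets are reordered by id and released
// oldest-first once `depth` packets are waiting (like packetBufferSize).
public sealed class JitterBuffer
{
    private readonly SortedList<ulong, float[]> pending = new SortedList<ulong, float[]>();
    private readonly int depth;

    public JitterBuffer(int depth) { this.depth = depth; }

    public int Count => pending.Count;

    // Returns false for a duplicate id instead of throwing like SortedList.Add.
    public bool Push(ulong id, float[] samples)
    {
        if (pending.ContainsKey(id)) return false;
        pending.Add(id, samples);
        return true;
    }

    // Releases the lowest id once the buffer holds at least `depth` packets.
    public bool TryPop(out ulong id, out float[] samples)
    {
        if (pending.Count < depth) { id = 0; samples = null; return false; }
        id = pending.Keys[0];
        samples = pending.Values[0];
        pending.RemoveAt(0);
        return true;
    }
}

// Hypothetical circular writer: copies a packet into a fixed-size clip buffer,
// splitting the copy when it runs past the end. Assumes length <= ring.Length.
public static class RingWriter
{
    // Returns the new write index.
    public static int Write(float[] ring, int index, float[] src, int length)
    {
        int first = Math.Min(length, ring.Length - index);
        Array.Copy(src, 0, ring, index, first);
        Array.Copy(src, first, ring, 0, length - first);
        return (index + length) % ring.Length;
    }
}
```

A deeper buffer absorbs more network jitter at the cost of latency: every packet that must queue before the first one is released adds one packet's worth of delay before playback starts.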

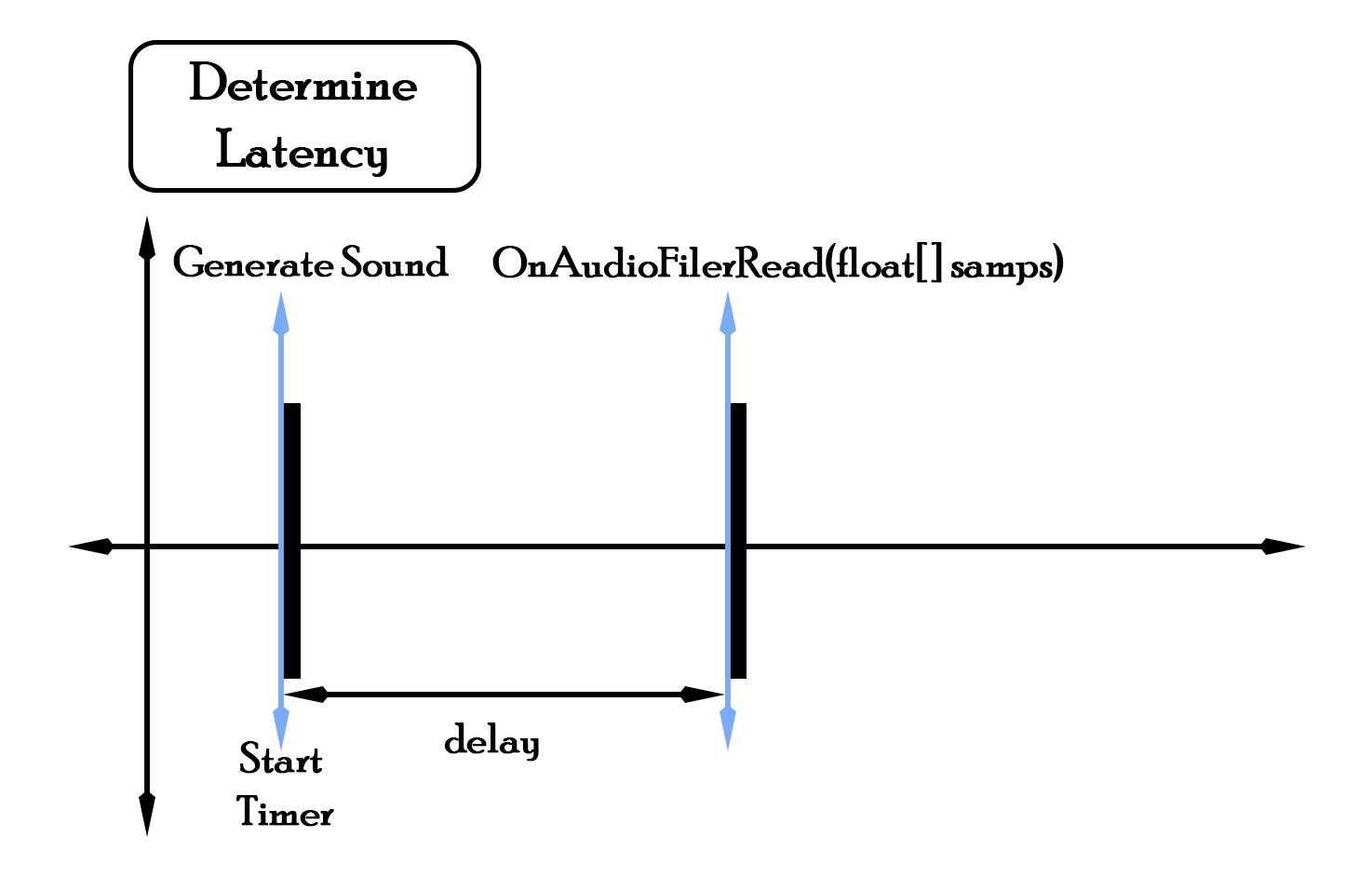

https://developer.oculus.com/documentation/audiosdk/latest/concepts/osp-unity-mobile-latency/

using UnityEngine;

using System.Collections;

using UnityEngine.UI;

using System;

[RequireComponent(typeof(AudioSource))]

public class MicrophoneInput : MonoBehaviour

{

AudioSource audioSource;

string micName;

public float freq;

public int globalSampleRate;

public int MICPOSITION;

public int _record_Head = 0;

public int _play_head = 0;

[Range(0.00f, 1.5f)]

public float echoTime;

[Range(-0.5f, 0.5f)]

public float delayTime = 0f;

[Range(0f, 1f)]

public float echoAttenuation;

private float[] echoBuffer = new float[(int)(48000 * 2 * 1.5f)];

void Start()

{

audioSource = GetComponent<AudioSource>();

globalSampleRate = AudioSettings.outputSampleRate;

foreach (string device in Microphone.devices)

{

Debug.Log("Name: " + device);

}

micName = Microphone.devices[0];

audioSource.clip = Microphone.Start(micName, true, 10, globalSampleRate);

audioSource.loop = true;

//while (!(Microphone.GetPosition(micName) > 0)) { } // Wait until the recording has started

////audioSource.time = audioSource.clip.length - .03f;

//audioSource.PlayDelayed(0.01f); // Play the audio source!

}

bool started = false;

void Update()

{

if(!started)

{

if (Microphone.GetPosition(micName) <= 0) return; // Recording hasn't started yet; check again next frame instead of blocking the main thread

//audioSource.time = audioSource.clip.length - .03f;

audioSource.PlayDelayed(0.01f); // Play the audio source!

started = true;

}

MICPOSITION = Microphone.GetPosition(micName);

}

double accumTime;

void OnAudioFilterRead(float[] data, int channels)

{

accumTime = (double)AudioSettings.dspTime;

double samples = AudioSettings.dspTime * globalSampleRate;

int dataLen = data.Length / channels;

double deltTime = (AudioSettings.dspTime / samples);

int n = 0;

float x;

while (n < dataLen)

{

int i = 0;

while (i < channels)

{

x = data[n * channels + i];

record(x + playback(delayTime));

data[n * channels + i] = x;

i++;

}

n++;

}

}

private void record(float samp)

{

if (++_record_Head > echoBuffer.Length - 1) _record_Head = 0;

echoBuffer[_record_Head] = samp;

}

private float playback()

{

_play_head = (int)(_record_Head - (echoTime * globalSampleRate * 2f));

if (_play_head < 0) _play_head += echoBuffer.Length; // wrap with the actual buffer length, not a rate-dependent size

return echoBuffer[_play_head] * echoAttenuation;

}

private float playback(float delay)

{

int delay_head = (int)(_record_Head + (delay * globalSampleRate * 2f)); // use the delay argument

if (delay_head < 0) delay_head += echoBuffer.Length;

if (delay_head > echoBuffer.Length - 1) delay_head -= echoBuffer.Length;

_play_head = (int)(delay_head - (echoTime * globalSampleRate * 2f));

if (_play_head < 0) _play_head += echoBuffer.Length;

return echoBuffer[_play_head] * echoAttenuation;

}

}
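Both `MicrophoneInput` versions keep a feedback echo in a circular buffer: `record` advances the write head and `playback` reads `echoTime * sampleRate * 2` slots behind it (the `2` assumes interleaved stereo). A minimal sketch of the same delay line, assuming one buffer slot per interleaved sample and wrapping with the buffer's real length rather than a size hard-coded for 48 kHz; `DelayLine` and its members are hypothetical.

```csharp
using System;

// Hypothetical circular delay line with one slot per interleaved sample.
public sealed class DelayLine
{
    private readonly float[] buffer;
    private int writeHead = -1; // index of the most recently written sample

    public DelayLine(int capacity)
    {
        if (capacity <= 0) throw new ArgumentOutOfRangeException(nameof(capacity));
        buffer = new float[capacity];
    }

    public int Capacity => buffer.Length;

    // Slots for a delay: seconds * sampleRate * channels (channels = 2 matches "* 2f").
    public static int OffsetFor(double seconds, int sampleRate, int channels)
        => (int)(seconds * sampleRate * channels);

    public void Write(float sample)
    {
        writeHead = (writeHead + 1) % buffer.Length; // same wrap as record()
        buffer[writeHead] = sample;
    }

    // Sample written `offset` writes ago (0 = newest), wrapped with the real length.
    public float ReadBehind(int offset)
    {
        if (offset < 0 || offset >= buffer.Length)
            throw new ArgumentOutOfRangeException(nameof(offset));
        int index = writeHead - offset;
        if (index < 0) index += buffer.Length;
        return buffer[index];
    }

    // Feedback echo y[n] = x[n] + gain * y[n - delay]; y[n] is written back into
    // the line, as record(x + playback(...)) does. Requires 1 <= delay <= Capacity.
    public float ProcessEcho(float input, int delay, float gain)
    {
        float y = input + gain * ReadBehind(delay - 1);
        Write(y);
        return y;
    }
}
```

Feeding a single impulse through `ProcessEcho` with a delay of 2 and a gain of 0.5 gives the decaying train 1, 0, 0.5, 0, 0.25.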

using UnityEngine;

using System.Collections;

using UnityEngine.UI;

using System;

[RequireComponent(typeof(AudioSource))]

public class MicrophoneInput : MonoBehaviour

{

AudioSource audioSource;

string micName;

public float freq;

public int micSampleRate = 11024;

public int globalSampleRate;

public double dt;

[Range(0.002f, 0.005f)]

public float dTime = 0.005f;

[Range(0, 5)]

public double gain = 1;

[Range(0, 1)]

public double gain1 = 1;

[Range(0, 1)]

public double gain2 = 1;

[Range(0, 1)]

public double gain3 = 1;

[Range(0.00f, 20f)]

public double multInterval1 = 1;

[Range(0.00f, 20f)]

public double multInterval2 = 1;

[Range(0.00f, 20f)]

public double multInterval3 = 1;

[Range(0.01f, 0.02f)]

public double phase1base = 0.01;

[Range(0.01f, 0.02f)]

public double phase2base = 0.01;

[Range(0.01f, 0.02f)]

public double phase3base = 0.01;

[Range(0.0f, 1f)]

public double attack1 = 0.01;

[Range(0.0f, 1f)]

public double decay1 = 0.01;

[Range(0.0f, 1f)]

public double attack2 = 0.01;

[Range(0.0f, 1f)]

public double decay2 = 0.01;

[Range(0.0f, 1f)]

public double attack3 = 0.01;

[Range(0.0f, 1f)]

public double decay3 = 0.01f;

public bool bVoiceHigh = true;

public bool bVoiceMid = true;

public bool bVoiceLow = true;

double phase1;

double phase2;

double phase3;

double amp1;

double amp2;

double amp3;

private double nextTick = 0.0F;

private double amp = 0.0F;

private double phase = 0.0F;

private int accent;

private bool running = false;

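// Note, period (s), frequency (Hz): 12-tone equal temperament with A4 = 432 Hz (period = 1 / frequency)

//B3 0.004124562 242.45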

//C4 0.00389302 256.87

// C#4/Db4 0.003674579 272.14

//D4 0.003468248 288.33

//D#4/Eb4 0.003273644 305.47

//E4 0.003089948 323.63

//F4 0.002916472 342.88

// F#4/Gb4 0.002752773 363.27

//G4 0.00259828 384.87

//G#4/Ab4 0.002452483 407.75

//A4 0.002314815 432

//A#4/Bb4 0.002184885 457.69

//B4 0.002062281 484.9

//C5 0.00194651 513.74

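// _dtime[i]: period (s) of note i in the table above, B3 through B4. _freqs[i]: midpoint between note i and note i+1, used as a boundary when matching a detected pitch

private float[] _freqs = { 249.66f, 264.505f, 280.235f, 296.9f, 314.55f, 333.255f, 353.075f, 374.07f, 396.31f, 419.875f, 444.845f, 471.295f, 499.32f };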

private float[] _dtime = { 0.004124562f, 0.00389302f, 0.003674579f, 0.003468248f, 0.003273644f, 0.003089948f, 0.002916472f,

0.002752773f, 0.00259828f, 0.002452483f, 0.002314815f, 0.002184885f, 0.002062281f};

//249.66 0.004124562

//264.505 0.00389302

//280.235 0.003674579

//296.9 0.003468248

//314.55 0.003273644

//333.255 0.003089948

//353.075 0.002916472

//374.07 0.002752773

//396.31 0.00259828

//419.875 0.002452483

//444.845 0.002314815

//471.295 0.002184885

//499.32 0.002062281

//256.87

private int _record_Head = 0;

private int _play_head = 0;

[Range(0.00f, 1.5f)]

public float echoTime;

[Range(-0.5f,0.5f)]

public float delayTime = 0f;

[Range(0f, 1f)]

public float echoAttenuation;

private float[] echoBuffer = new float[(int)(48000 * 2 * 1.5f)];

void Start()

{

audioSource = GetComponent<AudioSource>();

foreach (string device in Microphone.devices)

{

Debug.Log("Name: " + device);

}

micName = Microphone.devices[0];

audioSource.clip = Microphone.Start(micName, true, 10, micSampleRate);

audioSource.loop = true; // Set the AudioClip to loop

//audioSource.mute = true; // Mute the sound, we don't want the player to hear it

while (!(Microphone.GetPosition(micName) > 0)) { } // Wait until the recording has started

audioSource.time = audioSource.clip.length - .03f; // Playback wraps to the start and trails the recording head by roughly 30 ms

// Read the output rate before Play(): OnAudioFilterRead uses it on the audio thread

globalSampleRate = AudioSettings.outputSampleRate;

audioSource.Play(); // Play the audio source!

}

void Update()

{

freq = GetFundamentalFrequency();

}

float GetFundamentalFrequency()

{

float fundamentalFrequency = 0.0f;

float[] data = new float[8192];

audioSource.GetSpectrumData(data, 0, FFTWindow.BlackmanHarris);

float s = 0.0f;

int i = 0;

for (int j = 0; j <1200; j++)

{

if (s < data[j])

{

s = data[j];

i = j;

}

}

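// The spectrum spans 0 Hz to half the output sample rate, so each bin is (outputSampleRate / 2) / data.Length Hz wide (about 2.93 Hz at 48 kHz with 8192 bins)

fundamentalFrequency = i * (globalSampleRate / 2f) / data.Length;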

return fundamentalFrequency;

}

int count1 = 0;

int count2 = 0;

int count3 = 0;

double phasemult1 = 3.0f;

double phasemult2 = 2.0f;

double phasemult3 = 1.0f;

bool switch1 = true;

bool switch2 = true;

bool switch3 = true;

double time1;

double time2;

double time3;

double accumTime;

bool aOn = true;

double ampSamp(int voice, double sampTime)

{

amp = 0;

if (voice == 1)

{

if (sampTime - time1 < attack1)

{

amp = CubicEaseIn(sampTime - time1, 0, 1, attack1);

}

else if (sampTime - time1 < attack1 + decay1)

{

amp = CubicEaseOut((sampTime - time1 - attack1), 1, -1, decay1);

}

else

{

amp = 0;

}

}

if (voice == 2)

{

if (sampTime - time2 < attack2)

{

amp = CubicEaseIn(sampTime - time2, 0, 1, attack2);

}

else if (sampTime - time2 < attack2 + decay2)

{

amp = CubicEaseOut((sampTime - time2 - attack2), 1, -1, decay2);

}

else

{

amp = 0;

}

}

if (voice == 3)

{

if (sampTime - time3 < attack3)

{

amp = CubicEaseIn(sampTime - time3, 0, 1, attack3);

}

else if (sampTime - time3 < attack3 + decay3)

{

amp = CubicEaseOut((sampTime - time3 - attack3), 1, -1, decay3);

}

else

{

amp = 0;

}

}

return amp;

}

double WDT = 1; //Why Do This?

float dTIME = 0f;

void OnAudioFilterRead(float[] data, int channels)

{

accumTime = (double)AudioSettings.dspTime;

//private float[] _freqs = { 249.66f, 264.505f, 280.235f, 296.9f, 314.55f, 333.255f, 353.075f, 374.07f, 396.31f, 419.875f, 444.845f, 471.295f,499.32f };

// private float[] _dtime = { 0.004124562f, 0.00389302f, 0.003674579f, 0.003468248f, 0.003273644f, 0.003089948f, 0.002916472f,

// 0.002752773f, 0.00259828f, 0.002452483f, 0.002314815f, 0.002184885f, 0.002062281f};

//float dTIME = dTime;

//for (int i = 0; i<12; i++)

//{

// if(freq > 2*_freqs[i] && freq < 2*_freqs[i+1])

// {

// dTIME = _dtime[i + 1];

// }

//}

// phase1 = dTime * 2f * Mathf.PI / globalSampleRate;

if (count1++ > 10 * multInterval1)

{

time1 = accumTime;//Attack

if (switch1)

{

if (++phasemult1 > 11)

{

switch1 = false;

}

}

else

{

if (--phasemult1 < 2)

{

switch1 = true;

}

}

count1 = 0;

dTIME = _dtime[(int)phasemult1];

}

//if (count2++ > 10* multInterval2)

//{

// time2 = accumTime;//Attack

// if (switch2)

// {

// if (++phasemult2 > 4)

// {

// switch2= false;

// }

// }

// else

// {

// if (--phasemult2 < 4)

// {

// switch2 = true;

// }

// }

// count2 = 0;

//}

//if (count3++ > 10*multInterval3)

//{

// time3 = accumTime;//Attack

// if (switch3)

// {

// if (++phasemult3 > 2)

// {

// switch3 = false;

// }

// }

// else

// {

// if (--phasemult3 < 2)

// {

// switch3 = true;

// }

// }

// count3 = 0;

//}

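int dataLen = data.Length / channels;

double deltTime = 1.0 / globalSampleRate; // seconds per sample frame (dspTime / (dspTime * rate) is NaN when dspTime is 0)

int n = 0;

double a, b, c;

double sampTime = accumTime;

// Phase increment per sample = 2*pi*f / sampleRate, with f = 1 / dTIME (dTIME is a period in seconds)

dt = dTIME > 0f ? deltTime / dTIME * 2.0 * Math.PI : 0.0;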

while (n < dataLen)

{

sampTime += deltTime;

if (bVoiceHigh) a = WDT * gain * /*ampSamp(1, sampTime)*/ Math.Sin(phase1); else a = 0;//ampSamp(1,sampTime)

if (bVoiceMid) b = WDT * gain * /*ampSamp(2, sampTime) */ Math.Sin(phase2); else b = 0;

if (bVoiceLow) c = WDT * gain * /*ampSamp(3, sampTime) */ Math.Sin(phase3); else c = 0;

float x = (float)(gain1*a + gain2*b + gain3*c);

int i = 0;

while (i < channels)

{

record(data[n * channels + i] + playback(delayTime));

//data[n * channels + i] = (float)a;

data[n * channels + i] = playback();

i++;

}

phase1 += dt;

if (phase1 > Math.PI * 2) phase1 -= Math.PI * 2; // keep the fractional phase so the sine stays continuous

phase2 += phase2base * phasemult2;

if (phase2 > Math.PI * 2) phase2 -= Math.PI * 2;

phase3 += phase3base * phasemult3;

if (phase3 > Math.PI * 2) phase3 -= Math.PI * 2;

n++;

}

}

private void record(float samp)

{

if (++_record_Head > echoBuffer.Length - 1) _record_Head = 0;

echoBuffer[_record_Head] = samp;

}

private float playback()

{

_play_head = (int)(_record_Head - (echoTime * globalSampleRate * 2f));

if (_play_head < 0) _play_head += echoBuffer.Length; // wrap with the actual buffer length, not a rate-dependent size

return echoBuffer[_play_head] * echoAttenuation;

}

private float playback(float delay)

{

int delay_head = (int)(_record_Head + (delay * globalSampleRate * 2f)); // use the delay argument

if (delay_head < 0) delay_head += echoBuffer.Length;

if (delay_head > echoBuffer.Length - 1) delay_head -= echoBuffer.Length;

_play_head = (int)(delay_head - (echoTime * globalSampleRate * 2f));

if (_play_head < 0) _play_head += echoBuffer.Length;

return echoBuffer[_play_head] * echoAttenuation;

}

/// <summary>

/// Cubic easing: returns the eased value at time t (applies to CubicEaseOut and CubicEaseIn).

/// </summary>

/// <param name="t">how long into the ease we are (current)</param>

/// <param name="b">starting value if t were 0 (initialValue)</param>

/// <param name="c">total change in the value, i.e. end - start, not the end value (totalChange)</param>

/// <param name="d">total duration; when t == d the returned value equals b + c</param>

/// <returns>the eased value</returns>

public static double CubicEaseOut(double t, double b, double c, double d)

{

if (t < d)

{

return c * ((t = t / d - 1) * t * t + 1) + b;

}

else

{

return b + c;

}

}

public static double CubicEaseIn(double t, double b, double c, double d)

{

if (t < d)

{

return c * (t /= d) * t * t + b;

}

else

{

return b + c;

}

}

}
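The period table in the second `MicrophoneInput` matches 12-tone equal temperament tuned to A4 = 432 Hz (the A4 entry is 1/432 = 0.002314815 s), each `_freqs` entry is the midpoint between two adjacent notes, and `dt = deltTime / dTIME * 2π` is the usual phase increment 2πf/sampleRate with f = 1/dTIME. A sketch that reproduces those numbers; `NoteMath` and its methods are hypothetical, and the 432 Hz reference is read off the table.

```csharp
using System;

// Hypothetical helpers for the note sweep in the second MicrophoneInput.
public static class NoteMath
{
    private const double TwoPi = 2.0 * Math.PI;

    // Equal-tempered frequency (Hz) `semitonesFromA4` steps away from A4.
    public static double Frequency(int semitonesFromA4, double a4 = 432.0)
        => a4 * Math.Pow(2.0, semitonesFromA4 / 12.0);

    // Period in seconds, as stored in _dtime (B3 = -10, A4 = 0).
    public static double Period(int semitonesFromA4, double a4 = 432.0)
        => 1.0 / Frequency(semitonesFromA4, a4);

    // Arithmetic midpoint between a note and the next one up, as stored in _freqs.
    public static double UpperBoundary(int semitonesFromA4, double a4 = 432.0)
        => 0.5 * (Frequency(semitonesFromA4, a4) + Frequency(semitonesFromA4 + 1, a4));

    // Nearest note to a measured frequency, rounding in log-frequency space.
    public static int NearestSemitone(double frequency, double a4 = 432.0)
        => (int)Math.Round(12.0 * Math.Log(frequency / a4, 2.0));

    // Phase increment per sample: 2*pi*f / sampleRate (same as deltTime / dTIME * 2*pi).
    public static double PhaseStep(double frequency, int sampleRate)
        => TwoPi * frequency / sampleRate;

    // Advances the phase and wraps by subtracting 2*pi, keeping the fractional part.
    public static double Advance(double phase, double step)
    {
        phase += step;
        while (phase >= TwoPi) phase -= TwoPi;
        return phase;
    }

    // Fills `buffer` with a sine wave and returns the phase to continue from.
    public static double RenderSine(float[] buffer, double frequency, int sampleRate, double phase)
    {
        double step = PhaseStep(frequency, sampleRate);
        for (int n = 0; n < buffer.Length; n++)
        {
            buffer[n] = (float)Math.Sin(phase);
            phase = Advance(phase, step);
        }
        return phase;
    }
}
```

`NearestSemitone` rounds in log-frequency space, so its boundary between two notes is the geometric mean of their frequencies, slightly below the arithmetic midpoints stored in `_freqs` (about 249.56 Hz versus 249.66 Hz for B3/C4).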

//public class MicrophoneInput : MonoBehaviour

//{

// public double sensitivity = 100;

// public double loudness = 0;

// public Text dbg_Text;

// string micName;

// AudioSource audioSource;

// void Start()

// {

// audioSource = GetComponent<AudioSource>();

// foreach (string device in Microphone.devices)

// {

// Debug.Log("Name: " + device);

// }

// micName = Microphone.devices[0];

// audioSource.clip = Microphone.Start(null, true, 10, 44100);

// audioSource.loop = true; // Set the AudioClip to loop

// //audioSource.mute = true; // Mute the sound, we don't want the player to hear it

// while (!(Microphone.GetPosition(micName) > 0)) { } // Wait until the recording has started

// audioSource.Play(); // Play the audio source!

// }

// void Update()

// {

// //if (audioSource.isPlaying == false && Microphone.GetPosition(micName) > 1)

// //{

// // audioSource.Play();

// //}

// loudness = GetAveragedVolume() * sensitivity;

// dbg_Text.text = "Loudness = " + loudness.ToString("F8");

// }

// double GetAveragedVolume()

// {

// float[] data = new float[256]; // GetOutputData fills a float[]

// double a = 0;

// GetComponent<AudioSource>().GetOutputData(data, 0);

// foreach (float s in data)

// {

// a += Mathf.Abs(s);

// }

// return a / 256;

// }

//}
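`GetFundamentalFrequency` takes the largest of the first 1200 spectrum bins and converts the bin index to hertz, and the commented-out loudness class averages `|s|` over a block of output samples. A sketch of both as plain functions so they can be checked outside Unity, assuming the spectrum bins evenly cover 0 Hz up to half the sample rate (the usual reading of `AudioSource.GetSpectrumData`); `SignalLevel`, `PeakBin`, `BinToHz`, `MeanAbs` and `RmsDb` are hypothetical names, and the dBFS helper is an addition not in the original.

```csharp
using System;

// Hypothetical analysis helpers operating on plain arrays.
public static class SignalLevel
{
    // Index of the largest value among the first `maxBins` bins (first one wins on ties).
    public static int PeakBin(float[] spectrum, int maxBins)
    {
        int limit = Math.Min(maxBins, spectrum.Length);
        int best = 0;
        for (int j = 1; j < limit; j++)
            if (spectrum[j] > spectrum[best]) best = j;
        return best;
    }

    // Bin frequency, assuming `binCount` bins evenly cover 0 Hz .. sampleRate / 2.
    public static float BinToHz(int bin, int sampleRate, int binCount)
        => bin * (sampleRate / 2f) / binCount;

    // Mean absolute amplitude, as GetAveragedVolume computes it.
    public static float MeanAbs(float[] samples)
    {
        float sum = 0f;
        foreach (float s in samples) sum += Math.Abs(s);
        return samples.Length == 0 ? 0f : sum / samples.Length;
    }

    // RMS level in dB relative to full scale (1.0); negative infinity for silence.
    public static double RmsDb(float[] samples)
    {
        double sumSq = 0;
        foreach (float s in samples) sumSq += (double)s * s;
        double rms = samples.Length == 0 ? 0 : Math.Sqrt(sumSq / samples.Length);
        return 20.0 * Math.Log10(rms);
    }
}
```

With a 48 kHz output and 8192 bins, each bin is about 2.93 Hz wide, so the 1200-bin search window reaches roughly 3.5 kHz.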

GearVR Pipeline

For Test:

http://argos.vu/ArgosVu_ARVR_Sample.apk

target_stones_USLetter


![Screenshot_2016-06-06-00-44-25[1]](http://argos.vu/wp-content/uploads/2016/03/Screenshot_2016-06-06-00-44-251.png)