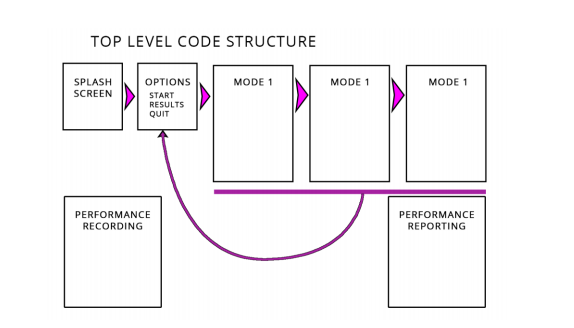

Splash

Into Environment – loaded with Default Settings (init config)

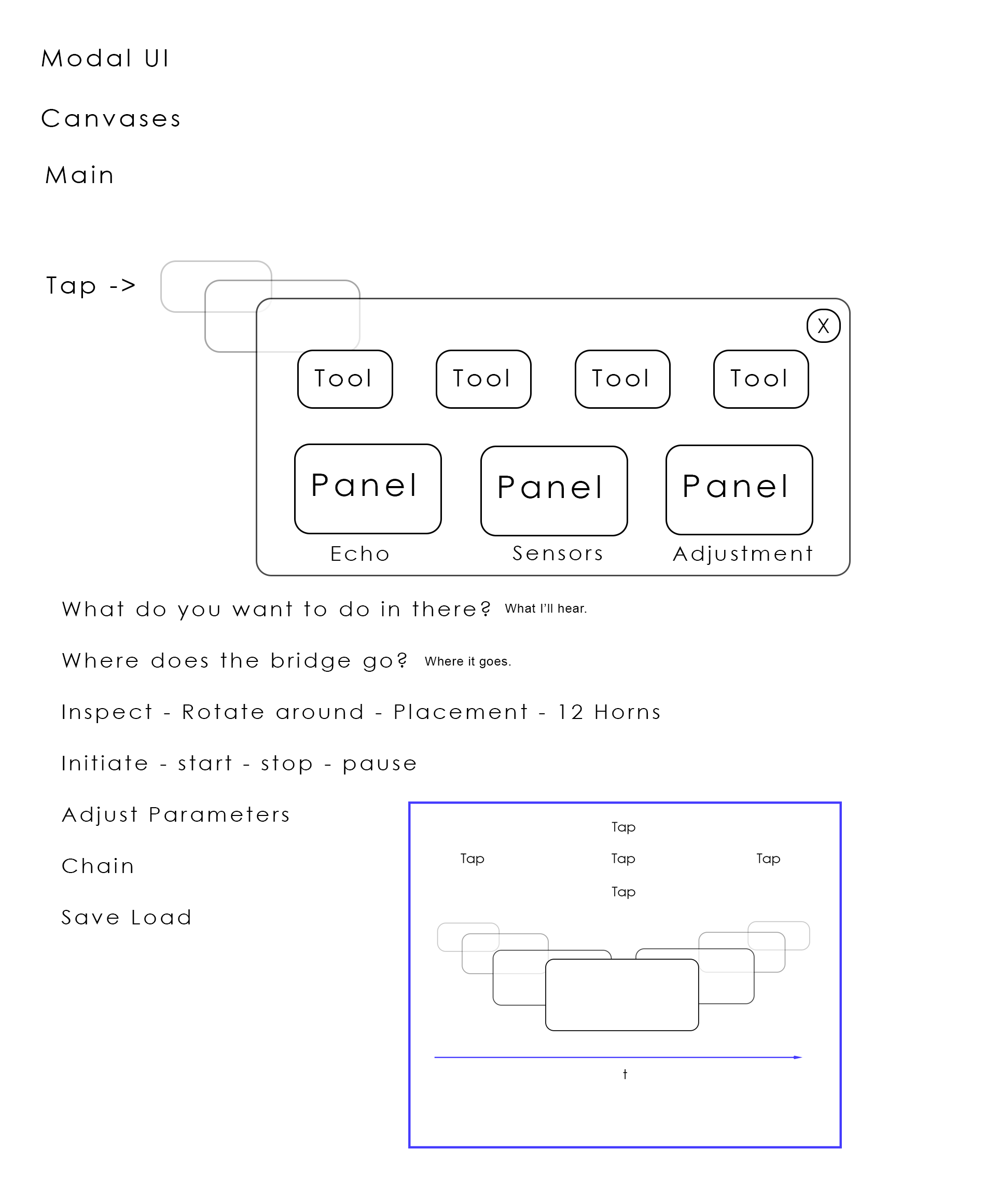

Tap – brings up the Main Menu. (Optional info dialogs, shown as modal dialogs, are on by default.)

Tools – four on top

0) default navigation – blue circle – navigation menu active

1) grabber – selects an object, holds it, and places it at the desired location (menus, waypoints, transforms)

2) paint – current paint mode

3) operating mode

ParticleGazeCursor

using UnityEngine;

using System.Collections;

[RequireComponent(typeof(OVRGazePointer))]

public class ParticleGazeCursor : MonoBehaviour

{

public float emissionScale;

public float maxSpeed;

[Header("Particle emission curves")]

// The curves' x axis is speed normalized by maxSpeed: 0 = stationary, 1 = maxSpeed

[Tooltip("Curve for trailing edge of pointer")]

public AnimationCurve halfEmission;

[Tooltip("Curve for full perimeter of pointer")]

public AnimationCurve fullEmission;

[Tooltip("Emit particle trails behind the pointer")]

public bool particleTrail;

public float particleScale = 0.68f;

Vector3 lastPos;

ParticleSystem psHalf;

ParticleSystem psFull;

MeshRenderer quadRenderer;

Color particleStartColor;

OVRGazePointer gazePointer;

// Use this for initialization

void Start()

{

gazePointer = GetComponent<OVRGazePointer>();

foreach (Transform child in transform)

{

if (child.name.Equals("Half"))

psHalf = child.GetComponent<ParticleSystem>();

if (child.name.Equals("Full"))

psFull = child.GetComponent<ParticleSystem>();

if (child.name.Equals("Quad"))

quadRenderer = child.GetComponent<MeshRenderer>();

}

float scale = transform.lossyScale.x;

psHalf.startSize *= scale;

psHalf.startSpeed *= scale;

psFull.startSize *= scale;

psFull.startSpeed *= scale;

particleStartColor = psFull.startColor;

if (!particleTrail)

{

GameObject.Destroy(psHalf);

GameObject.Destroy(psFull);

}

}

// Update is called once per frame

void Update()

{

var delta = gazePointer.positionDelta; // reference cached in Start() instead of calling GetComponent every frame

if (particleTrail)

{

// Evaluate these curves to decide the emission rate of the two sources of particles.

psHalf.emissionRate = halfEmission.Evaluate((delta.magnitude / Time.deltaTime) / maxSpeed) * emissionScale;

psFull.emissionRate = fullEmission.Evaluate((delta.magnitude / Time.deltaTime) / maxSpeed) * emissionScale;

// Fade the particles with the pointer's visibility, the same way the main ring fades

Color color = particleStartColor;

color.a = gazePointer.visibilityStrength;

psHalf.startColor = color;

psFull.startColor = color;

// Particles also scale when the gaze pointer scales

psFull.startSize = particleScale * transform.lossyScale.x;

psHalf.startSize = particleScale * transform.lossyScale.x;

}

// Set the main pointer's alpha to the visibility strength to achieve the desired level of fade

quadRenderer.material.SetColor("_TintColor",new Color(1, 1, 1, gazePointer.visibilityStrength));

}

}
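Sketch (not part of the Oculus scripts): the emission rate in ParticleGazeCursor.Update() is the pointer's speed, normalized by maxSpeed, run through a curve and multiplied by emissionScale. Below is a minimal, Unity-free C# version of that mapping, assuming a piecewise-linear curve as a stand-in for AnimationCurve (Unity's curves interpolate with tangents, so values between keys will differ). LinearCurve, EmissionSketch and EmissionRate are hypothetical names.

```csharp
using System;

// Hypothetical stand-in for UnityEngine.AnimationCurve: linear interpolation
// between (time, value) keys sorted by time, clamped outside the first and last key.
public sealed class LinearCurve
{
    private readonly (float Time, float Value)[] keys;

    public LinearCurve(params (float Time, float Value)[] keys)
    {
        if (keys.Length == 0) throw new ArgumentException("At least one key is required.");
        this.keys = keys;
    }

    public float Evaluate(float t)
    {
        if (t <= keys[0].Time) return keys[0].Value;
        for (int i = 1; i < keys.Length; i++)
        {
            if (t <= keys[i].Time)
            {
                // Here keys[i - 1].Time < t <= keys[i].Time, so the span is never zero
                var (t0, v0) = keys[i - 1];
                var (t1, v1) = keys[i];
                return v0 + (v1 - v0) * ((t - t0) / (t1 - t0));
            }
        }
        return keys[keys.Length - 1].Value;
    }
}

public static class EmissionSketch
{
    // Mirrors ParticleGazeCursor.Update(): speed = distance moved / frame time,
    // normalized by maxSpeed, evaluated on the curve, then scaled.
    public static float EmissionRate(LinearCurve curve, float distanceMoved, float deltaTime,
                                     float maxSpeed, float emissionScale)
    {
        float normalizedSpeed = (distanceMoved / deltaTime) / maxSpeed;
        return curve.Evaluate(normalizedSpeed) * emissionScale;
    }
}
```

With keys (0, 0) and (1, 10) and emissionScale = 2, a pointer moving at half of maxSpeed emits about 10 particles per second, and anything at or above maxSpeed saturates at 20.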

OVRGazePointer

using UnityEngine;

using System.Collections;

public class OVRGazePointer : MonoBehaviour {

[Tooltip("Should the pointer be hidden when not over interactive objects.")]

public bool hideByDefault = true;

[Tooltip("Time after leaving interactive object before pointer fades.")]

public float showTimeoutPeriod = 1;

[Tooltip("Time after the mouse pointer becomes inactive before the gaze pointer unfades.")]

public float hideTimeoutPeriod = 0.1f;

[Tooltip("Keep a faint version of the pointer visible while using a mouse")]

public bool dimOnHideRequest = true;

[Tooltip("Angular scale of pointer")]

public float depthScaleMultiplier = 0.03f;

[Tooltip("Used for positioning pointer in scene")]

public OVRCameraRig cameraRig;

/// <summary>

/// Is the gaze pointer currently hidden

/// </summary>

public bool hidden { get; private set; }

/// <summary>

/// Current scale applied to pointer

/// </summary>

public float currentScale { get; private set; }

/// <summary>

/// Current depth of pointer from camera

/// </summary>

private float depth;

/// <summary>

/// How many times position has been set this frame. Used to detect when there are no position sets in a frame.

/// </summary>

private int positionSetsThisFrame = 0;

/// <summary>

/// Position last frame.

/// </summary>

private Vector3 lastPosition;

/// <summary>

/// Last time code requested the pointer be shown. Usually when pointer passes over interactive elements.

/// </summary>

private float lastShowRequestTime;

/// <summary>

/// Last time pointer was requested to be hidden. Usually mouse pointer activity.

/// </summary>

private float lastHideRequestTime;

// How much the gaze pointer moved in the last frame

private Vector3 _positionDelta;

public Vector3 positionDelta { get { return _positionDelta; } }

private static OVRGazePointer _instance;

public static OVRGazePointer instance

{

// If there's no GazePointer already in the scene, instantiate one now.

get

{

if (_instance == null)

{

Debug.Log("Instantiating GazePointer");

_instance = (OVRGazePointer)GameObject.Instantiate((OVRGazePointer)Resources.Load("Prefabs/GazePointerRing", typeof(OVRGazePointer)));

}

return _instance;

}

}

public float visibilityStrength

{

get

{

float strengthFromShowRequest;

if (hideByDefault)

{

strengthFromShowRequest = Mathf.Clamp01(1 - (Time.time - lastShowRequestTime) / showTimeoutPeriod);

}

else

{

strengthFromShowRequest = 1;

}

// Now consider factors requesting pointer to be hidden

float strengthFromHideRequest;

if (dimOnHideRequest)

{

strengthFromHideRequest = (lastHideRequestTime + hideTimeoutPeriod > Time.time) ? 0.1f : 1;

}

else

{

strengthFromHideRequest = (lastHideRequestTime + hideTimeoutPeriod > Time.time) ? 0 : 1;

}

// Hide requests take priority

return Mathf.Min(strengthFromShowRequest, strengthFromHideRequest);

}

}

private void Awake()

{

currentScale = 1;

// Only allow one instance at runtime.

if (_instance != null && _instance != this)

{

enabled = false;

DestroyImmediate(this);

return;

}

_instance = this;

}

// Update is called once per frame

void Update () {

// Even if this runs after SetPosition, it will work out to be the same position

// Keep pointer at same distance from camera rig

transform.position = cameraRig.centerEyeAnchor.transform.position + cameraRig.centerEyeAnchor.transform.forward * depth;

if (visibilityStrength == 0 && !hidden)

{

Hide();

}

else if (visibilityStrength > 0 && hidden)

{

Show();

}

}

/// <summary>

/// Set position and orientation of pointer

/// </summary>

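/// <param name="pos">World-space position of the pointer</param>

/// <param name="normal">Normal of the surface under the pointer; the pointer's forward axis is aligned with it</param>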

public void SetPosition(Vector3 pos, Vector3 normal)

{

transform.position = pos;

// Set the rotation to match the normal of the surface it's on, using world up as the roll reference.

// (Using the direction of movement for the roll, as in the commented-out expression, would make trail effects easier.)

Quaternion newRot = transform.rotation;

newRot.SetLookRotation(normal, Vector3.up);//(lastPosition - transform.position).normalized

transform.rotation = newRot;

// record depth so that distance doesn't pop when pointer leaves an object

depth = (cameraRig.centerEyeAnchor.transform.position - pos).magnitude;

//set scale based on depth

currentScale = depth * depthScaleMultiplier;

transform.localScale = new Vector3(currentScale, currentScale, currentScale);

positionSetsThisFrame++;

}

/// <summary>

/// SetPosition overload without a normal. Orients the cursor along the camera's forward direction so it faces the user

/// </summary>

/// <param name="pos">World-space position of the pointer</param>

public void SetPosition(Vector3 pos)

{

SetPosition(pos, cameraRig.centerEyeAnchor.transform.forward);

}

void LateUpdate()

{

// This happens after all Updates so we know nothing set the position this frame

if (positionSetsThisFrame == 0)

{

// No geometry intersections, so gazing into space. Make the cursor face directly at the camera

Quaternion newRot = transform.rotation;

newRot.SetLookRotation(cameraRig.centerEyeAnchor.transform.forward, Vector3.up);//(lastPosition - transform.position).normalized

transform.rotation = newRot;

}

// Keep track of cursor movement direction

_positionDelta = transform.position - lastPosition;

lastPosition = transform.position;

positionSetsThisFrame = 0;

}

/// <summary>

/// Request the pointer be hidden

/// </summary>

public void RequestHide()

{

if (!dimOnHideRequest)

{

Hide();

}

lastHideRequestTime = Time.time;

}

/// <summary>

/// Request the pointer be shown. Hide requests take priority

/// </summary>

public void RequestShow()

{

Show();

lastShowRequestTime = Time.time;

}

private void Hide()

{

foreach (Transform child in transform)

{

child.gameObject.SetActive(false);

}

if (GetComponent<Renderer>())

GetComponent<Renderer>().enabled = false;

hidden = true;

}

private void Show()

{

foreach (Transform child in transform)

{

child.gameObject.SetActive(true);

}

if (GetComponent<Renderer>())

GetComponent<Renderer>().enabled = true;

hidden = false;

}

}
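Sketch: OVRGazePointer.visibilityStrength combines a fade that starts when show requests stop (showTimeoutPeriod) with a hide request that dims the pointer to 0.1, or hides it fully, for hideTimeoutPeriod; the smaller value wins. The pointer's scale is depth * depthScaleMultiplier, which keeps its apparent size constant. The C# below models both without Unity, passing the current time explicitly in place of Time.time. GazeVisibilityModel and its members are hypothetical names; the defaults are copied from the listing.

```csharp
using System;

// Hypothetical, Unity-free model of OVRGazePointer's visibility and depth scaling.
// "now" stands in for Time.time; defaults are copied from the OVRGazePointer listing.
public sealed class GazeVisibilityModel
{
    public bool HideByDefault = true;
    public float ShowTimeoutPeriod = 1f;
    public float HideTimeoutPeriod = 0.1f;
    public bool DimOnHideRequest = true;

    // Like the original fields, these start at 0 (i.e. as if a request happened at time 0)
    private float lastShowRequestTime;
    private float lastHideRequestTime;

    public void RequestShow(float now) => lastShowRequestTime = now;
    public void RequestHide(float now) => lastHideRequestTime = now;

    public float VisibilityStrength(float now)
    {
        // Fades linearly from 1 to 0 over ShowTimeoutPeriod after the last show request
        float fromShow = HideByDefault
            ? Clamp01(1f - (now - lastShowRequestTime) / ShowTimeoutPeriod)
            : 1f;

        // A recent hide request dims (0.1) or fully hides (0) the pointer
        bool hideActive = lastHideRequestTime + HideTimeoutPeriod > now;
        float fromHide = hideActive ? (DimOnHideRequest ? 0.1f : 0f) : 1f;

        // Hide requests take priority: the smaller strength wins
        return Math.Min(fromShow, fromHide);
    }

    // Scale grows linearly with distance from the eye, so the angular size stays constant
    public static float ScaleForDepth(float depth, float depthScaleMultiplier) => depth * depthScaleMultiplier;

    private static float Clamp01(float x) => x < 0f ? 0f : (x > 1f ? 1f : x);
}
```

Calling RequestShow every frame while the gaze is over an interactive object keeps the strength at 1; once the calls stop, it reaches 0 after ShowTimeoutPeriod seconds.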

OVRRaycaster

using System;

using System.Collections;

using System.Collections.Generic;

using System.Text;

using UnityEngine;

using UnityEngine.UI;

using UnityEngine.EventSystems;

using UnityEngine.Serialization;

[RequireComponent(typeof(Canvas))]

public class OVRRaycaster : GraphicRaycaster, IPointerEnterHandler

{

[Tooltip("A world space pointer for this canvas")]

public GameObject pointer;

protected OVRRaycaster()

{ }

[NonSerialized]

private Canvas m_Canvas;

private Canvas canvas

{

get

{

if (m_Canvas != null)

return m_Canvas;

m_Canvas = GetComponent<Canvas>();

return m_Canvas;

}

}

public override Camera eventCamera

{

get

{

return canvas.worldCamera;

}

}

[NonSerialized]

private List<RaycastHit> m_RaycastResults = new List<RaycastHit>();

/// <summary>

/// For the given ray, find graphics on this canvas which it intersects and are not blocked by other

/// world objects

/// </summary>

private void Raycast(PointerEventData eventData, List<RaycastResult> resultAppendList, Ray ray, bool checkForBlocking)

{

//This function is closely based on

//void GraphicRaycaster.Raycast(PointerEventData eventData, List<RaycastResult> resultAppendList)

if (canvas == null)

return;

float hitDistance = float.MaxValue;

if (checkForBlocking && blockingObjects != BlockingObjects.None)

{

float dist = eventCamera.farClipPlane;

if (blockingObjects == BlockingObjects.ThreeD || blockingObjects == BlockingObjects.All)

{

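var hits = Physics.RaycastAll(ray, dist, m_BlockingMask);

// RaycastAll does not return hits in distance order, so check every hit for the nearest blocker

for (int h = 0; h < hits.Length; h++)

{

if (hits[h].distance < hitDistance)

hitDistance = hits[h].distance;

}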

}

if (blockingObjects == BlockingObjects.TwoD || blockingObjects == BlockingObjects.All)

{

var hits = Physics2D.GetRayIntersectionAll(ray, dist, m_BlockingMask);

if (hits.Length > 0 && hits[0].fraction * dist < hitDistance)

{

hitDistance = hits[0].fraction * dist;

}

}

}

m_RaycastResults.Clear();

GraphicRaycast(canvas, ray, m_RaycastResults);

for (var index = 0; index < m_RaycastResults.Count; index++)

{

var go = m_RaycastResults[index].graphic.gameObject;

bool appendGraphic = true;

if (ignoreReversedGraphics)

{

// Skip graphics facing away from the ray: compare each graphic's forward with the ray direction.

var rayForward = ray.direction;

var dir = go.transform.rotation * Vector3.forward;

appendGraphic = Vector3.Dot(rayForward, dir) > 0;

}

// Ignore points behind us (can happen with a canvas pointer)

if (eventCamera.transform.InverseTransformPoint(m_RaycastResults[index].worldPos).z <= 0)

{

appendGraphic = false;

}

if (appendGraphic)

{

float distance = Vector3.Distance(ray.origin, m_RaycastResults[index].worldPos);

if (distance >= hitDistance)

{

continue;

}

var castResult = new RaycastResult

{

gameObject = go,

module = this,

distance = distance,

index = resultAppendList.Count,

depth = m_RaycastResults[index].graphic.depth,

worldPosition = m_RaycastResults[index].worldPos

};

resultAppendList.Add(castResult);

}

}

}

/// <summary>

/// Performs a raycast using eventData.worldSpaceRay

/// </summary>

/// <param name="eventData"></param>

/// <param name="resultAppendList"></param>

public override void Raycast(PointerEventData eventData, List<RaycastResult> resultAppendList)

{

OVRRayPointerEventData rayPointerEventData = eventData as OVRRayPointerEventData;

if (rayPointerEventData != null)

{

Raycast(eventData, resultAppendList, rayPointerEventData.worldSpaceRay, true);

}

}

/// <summary>

/// Performs a raycast using the pointer object attached to this OVRRaycaster

/// </summary>

/// <param name="eventData"></param>

/// <param name="resultAppendList"></param>

public void RaycastPointer(PointerEventData eventData, List<RaycastResult> resultAppendList)

{

if (pointer != null)

{

Raycast(eventData, resultAppendList, new Ray(eventCamera.transform.position, (pointer.transform.position - eventCamera.transform.position).normalized), false);

}

}

[NonSerialized]

static readonly List<RaycastHit> s_SortedGraphics = new List<RaycastHit>();

/// <summary>

/// Cast the given ray against this canvas and collect all graphics it hits, sorted by depth (front-most first).

/// </summary>

private void GraphicRaycast(Canvas canvas, Ray ray, List<RaycastHit> results)

{

//This function is based closely on :

// void GraphicRaycaster.Raycast(Canvas canvas, Camera eventCamera, Vector2 pointerPosition, List<Graphic> results)

// But modified to take a Ray instead of a canvas pointer, and also to explicitly ignore

// the graphic associated with the pointer

// Necessary for the event system

var foundGraphics = GraphicRegistry.GetGraphicsForCanvas(canvas);

s_SortedGraphics.Clear();

for (int i = 0; i < foundGraphics.Count; ++i)

{

Graphic graphic = foundGraphics[i];

// -1 means it hasn't been processed by the canvas, which means it isn't actually drawn

if (graphic.depth == -1 || (pointer == graphic.gameObject))

continue;

Vector3 worldPos;

if (RayIntersectsRectTransform(graphic.rectTransform, ray, out worldPos))

{

//Work out where this is on the screen for compatibility with existing Unity UI code

Vector2 screenPos = eventCamera.WorldToScreenPoint(worldPos);

// mask/image intersection - See Unity docs on eventAlphaThreshold for when this does anything

if (graphic.Raycast(screenPos, eventCamera))

{

RaycastHit hit;

hit.graphic = graphic;

hit.worldPos = worldPos;

hit.fromMouse = false;

s_SortedGraphics.Add(hit);

}

}

}

s_SortedGraphics.Sort((g1, g2) => g2.graphic.depth.CompareTo(g1.graphic.depth));

for (int i = 0; i < s_SortedGraphics.Count; ++i)

{

results.Add(s_SortedGraphics[i]);

}

}

/// <summary>

/// Get screen position of worldPosition contained in this RaycastResult

/// </summary>

/// <param name="raycastResult">Raycast result whose worldPosition is converted</param>

/// <returns>Screen position as seen by the event camera</returns>

public Vector2 GetScreenPosition(RaycastResult raycastResult)

{

// In future versions of Unity RaycastResult will contain screenPosition so this will not be necessary

return eventCamera.WorldToScreenPoint(raycastResult.worldPosition);

}

/// <summary>

/// Detects whether a ray intersects a RectTransform and if it does also

/// returns the world position of the intersection.

/// </summary>

/// <param name="rectTransform"></param>

/// <param name="ray"></param>

/// <param name="worldPos"></param>

/// <returns></returns>

static bool RayIntersectsRectTransform(RectTransform rectTransform, Ray ray, out Vector3 worldPos)

{

Vector3[] corners = new Vector3[4];

rectTransform.GetWorldCorners(corners);

Plane plane = new Plane(corners[0], corners[1], corners[2]);

float enter;

if (!plane.Raycast(ray, out enter))

{

worldPos = Vector3.zero;

return false;

}

Vector3 intersection = ray.GetPoint(enter);

Vector3 BottomEdge = corners[3] - corners[0];

Vector3 LeftEdge = corners[1] - corners[0];

float BottomDot = Vector3.Dot(intersection - corners[0], BottomEdge);

float LeftDot = Vector3.Dot(intersection - corners[0], LeftEdge);

if (BottomDot < BottomEdge.sqrMagnitude && // BottomDot = projection length * |BottomEdge|, so compare against |BottomEdge|^2

LeftDot < LeftEdge.sqrMagnitude &&

BottomDot >= 0 &&

LeftDot >= 0)

{

worldPos = corners[0] + LeftDot * LeftEdge / LeftEdge.sqrMagnitude + BottomDot * BottomEdge / BottomEdge.sqrMagnitude;

return true;

}

else

{

worldPos = Vector3.zero;

return false;

}

}

struct RaycastHit

{

public Graphic graphic;

public Vector3 worldPos;

public bool fromMouse;

};

/// <summary>

/// Is this the currently focussed Raycaster according to the InputModule

/// </summary>

/// <returns></returns>

public bool IsFocussed()

{

OVRInputModule inputModule = EventSystem.current.currentInputModule as OVRInputModule;

return inputModule && inputModule.activeGraphicRaycaster == this;

}

public void OnPointerEnter(PointerEventData e)

{

PointerEventData ped = e as OVRRayPointerEventData;

if (ped != null)

{

// Gaze has entered this canvas. We'll make it the active one so that canvas-mouse pointer can be used.

OVRInputModule inputModule = EventSystem.current.currentInputModule as OVRInputModule;

// Guard against a different input module being active

if (inputModule != null)

inputModule.activeGraphicRaycaster = this;

}

}

}
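Sketch: RayIntersectsRectTransform first intersects the ray with the rect's plane, then projects the hit point onto the bottom and left edges. A dot product with an unnormalized edge equals the projection length times the edge length, so the point lies within that edge when the dot product is between 0 and the edge's squared length. The C# below ports that test to System.Numerics.Vector3 so it can run outside Unity. The plane intersection is written out by hand in place of UnityEngine.Plane.Raycast and only approximates its edge cases; RectRaySketch and RayIntersectsRect are hypothetical names.

```csharp
using System;
using System.Numerics;

// Hypothetical Unity-free port of OVRRaycaster.RayIntersectsRectTransform.
// corners follow RectTransform.GetWorldCorners order:
// [0] bottom-left, [1] top-left, [2] top-right, [3] bottom-right.
public static class RectRaySketch
{
    public static bool RayIntersectsRect(Vector3[] corners, Vector3 origin, Vector3 direction, out Vector3 worldPos)
    {
        worldPos = Vector3.Zero;

        // Plane through corners 0, 1 and 2 (hand-written stand-in for Plane.Raycast)
        Vector3 normal = Vector3.Normalize(Vector3.Cross(corners[1] - corners[0], corners[2] - corners[0]));
        float denom = Vector3.Dot(normal, direction);
        if (Math.Abs(denom) < 1e-6f)
            return false; // ray is parallel to the rect
        float enter = Vector3.Dot(normal, corners[0] - origin) / denom;
        if (enter < 0f)
            return false; // rect is behind the ray origin

        Vector3 intersection = origin + direction * enter;
        Vector3 bottomEdge = corners[3] - corners[0];
        Vector3 leftEdge = corners[1] - corners[0];
        float bottomDot = Vector3.Dot(intersection - corners[0], bottomEdge);
        float leftDot = Vector3.Dot(intersection - corners[0], leftEdge);

        // dot(p, edge) = projection length * |edge|, so the projection lies on the edge
        // exactly when 0 <= dot < |edge|^2; no square roots are needed
        if (bottomDot < 0f || bottomDot >= bottomEdge.LengthSquared() ||
            leftDot < 0f || leftDot >= leftEdge.LengthSquared())
            return false;

        // Rebuild the point from its edge coordinates, as the original does
        worldPos = corners[0]
                 + leftEdge * (leftDot / leftEdge.LengthSquared())
                 + bottomEdge * (bottomDot / bottomEdge.LengthSquared());
        return true;
    }
}
```

For a 2 x 2 rect centered on (0, 0, 5) and facing the origin, a ray from the origin along +Z hits at (0, 0, 5), while a ray tilted to (0.5, 0, 1) lands at x = 2.5, outside the rect, and is rejected.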

OVRPhysicsRaycaster

using System.Collections.Generic;

namespace UnityEngine.EventSystems

{

/// <summary>

/// Simple event system using physics raycasts.

/// </summary>

[RequireComponent(typeof(OVRCameraRig))]

public class OVRPhysicsRaycaster : BaseRaycaster

{

/// <summary>

/// Const to use for clarity when no event mask is set

/// </summary>

protected const int kNoEventMaskSet = -1;

/// <summary>

/// Layer mask used to filter events. Always combined with the camera's culling mask if a camera is used.

/// </summary>

[SerializeField]

protected LayerMask m_EventMask = kNoEventMaskSet;

protected OVRPhysicsRaycaster()

{ }

public override Camera eventCamera

{

get

{

return GetComponent<OVRCameraRig>().leftEyeCamera;

}

}

/// <summary>

/// Depth used to determine the order of event processing.

/// </summary>

public virtual int depth

{

get { return (eventCamera != null) ? (int)eventCamera.depth : 0xFFFFFF; }

}

/// <summary>

/// Event mask used to determine which objects will receive events.

/// </summary>

public int finalEventMask

{

get { return (eventCamera != null) ? eventCamera.cullingMask & m_EventMask : kNoEventMaskSet; }

}

/// <summary>

/// Layer mask used to filter events. Always combined with the camera's culling mask if a camera is used.

/// </summary>

public LayerMask eventMask

{

get { return m_EventMask; }

set { m_EventMask = value; }

}

/// <summary>

/// Perform a raycast using the worldSpaceRay in eventData.

/// </summary>

/// <param name="eventData"></param>

/// <param name="resultAppendList"></param>

public override void Raycast(PointerEventData eventData, List<RaycastResult> resultAppendList)

{

// This function is closely based on PhysicsRaycaster.Raycast

if (eventCamera == null)

return;

OVRRayPointerEventData rayPointerEventData = eventData as OVRRayPointerEventData;

if (rayPointerEventData == null)

return;

var ray = rayPointerEventData.worldSpaceRay;

float dist = eventCamera.farClipPlane - eventCamera.nearClipPlane;

var hits = Physics.RaycastAll(ray, dist, finalEventMask);

if (hits.Length > 1)

System.Array.Sort(hits, (r1, r2) => r1.distance.CompareTo(r2.distance));

if (hits.Length != 0)

{

for (int b = 0, bmax = hits.Length; b < bmax; ++b)

{

var result = new RaycastResult

{

gameObject = hits[b].collider.gameObject,

module = this,

distance = hits[b].distance,

index = resultAppendList.Count,

worldPosition = hits[b].point,

worldNormal = hits[b].normal,

};

resultAppendList.Add(result);

}

}

}

/// <summary>

/// Perform a Spherecast using the worldSpaceRay in eventData.

/// </summary>

/// <param name="eventData"></param>

/// <param name="resultAppendList"></param>

/// <param name="radius">Radius of the sphere</param>

public void Spherecast(PointerEventData eventData, List<RaycastResult> resultAppendList, float radius)

{

if (eventCamera == null)

return;

OVRRayPointerEventData rayPointerEventData = eventData as OVRRayPointerEventData;

if (rayPointerEventData == null)

return;

var ray = rayPointerEventData.worldSpaceRay;

float dist = eventCamera.farClipPlane - eventCamera.nearClipPlane;

var hits = Physics.SphereCastAll(ray, radius, dist, finalEventMask);

if (hits.Length > 1)

System.Array.Sort(hits, (r1, r2) => r1.distance.CompareTo(r2.distance));

if (hits.Length != 0)

{

for (int b = 0, bmax = hits.Length; b < bmax; ++b)

{

var result = new RaycastResult

{

gameObject = hits[b].collider.gameObject,

module = this,

distance = hits[b].distance,

index = resultAppendList.Count,

worldPosition = hits[b].point,

worldNormal = hits[b].normal,

};

resultAppendList.Add(result);

}

}

}

/// <summary>

/// Get screen position of this world position as seen by the event camera of this OVRPhysicsRaycaster

/// </summary>

/// <param name="worldPosition"></param>

/// <returns></returns>

public Vector2 GetScreenPos(Vector3 worldPosition)

{

// In future versions of Unity RaycastResult will contain screenPosition so this will not be necessary

return eventCamera.WorldToScreenPoint(worldPosition);

}

}

}
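Sketch: OVRPhysicsRaycaster.Raycast and Spherecast sort the physics hits by distance and append one RaycastResult per hit, with index recording the append order; each result should take its distance, position and normal from its own hit. The C# below reproduces that sort-then-append pattern without Unity. Hit and Result are hypothetical stand-ins for UnityEngine.RaycastHit and RaycastResult, and HitSortingSketch.AppendSorted is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

// Hypothetical stand-in for UnityEngine.RaycastHit
public readonly struct Hit
{
    public readonly string Name;
    public readonly float Distance;
    public readonly Vector3 Point;
    public Hit(string name, float distance, Vector3 point) { Name = name; Distance = distance; Point = point; }
}

// Hypothetical stand-in for UnityEngine.EventSystems.RaycastResult
public struct Result
{
    public string Name;
    public float Distance;
    public int Index;
    public Vector3 WorldPosition;
}

public static class HitSortingSketch
{
    // Sort by distance, then append one result per hit, taking all data from that same hit
    public static void AppendSorted(Hit[] hits, List<Result> resultAppendList)
    {
        if (hits.Length > 1)
            Array.Sort(hits, (a, b) => a.Distance.CompareTo(b.Distance));

        foreach (var hit in hits)
        {
            resultAppendList.Add(new Result
            {
                Name = hit.Name,
                Distance = hit.Distance,
                Index = resultAppendList.Count, // position in the list before this Add
                WorldPosition = hit.Point,
            });
        }
    }
}
```

Appending a far hit and a near hit in that order yields the near hit first, with Index 0, and each result keeps its own WorldPosition.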

OVRInputModule

using System;

using System.Collections.Generic;

namespace UnityEngine.EventSystems

{

public class OVRInputModule : PointerInputModule

{

[Tooltip("Object which points with Z axis. E.g. CenterEyeAnchor from OVRCameraRig")]

public Transform rayTransform;

[Tooltip("Gamepad button to act as gaze click")]

public OVRInput.Button joyPadClickButton = OVRInput.Button.One;

[Tooltip("Keyboard button to act as gaze click")]

public KeyCode gazeClickKey = KeyCode.Space;

[Header("Physics")]

[Tooltip("Perform a sphere cast to determine correct depth for gaze pointer")]

public bool performSphereCastForGazepointer;

[Tooltip("Match the gaze pointer normal to geometry normal for physics colliders")]

public bool matchNormalOnPhysicsColliders;

[Header("Gamepad Stick Scroll")]

[Tooltip("Enable scrolling with the left stick on a gamepad")]

public bool useLeftStickScroll = true;

[Tooltip("Deadzone for left stick to prevent accidental scrolling")]

public float leftStickDeadZone = 0.15f;

[Header("Touchpad Swipe Scroll")]

[Tooltip("Enable scrolling by swiping the GearVR touchpad")]

public bool useSwipeScroll = true;

[Tooltip("Minimum swipe amount to trigger scrolling")]

public float minSwipeMovement = 0;

[Tooltip("Distance scrolled when swipe scroll occurs")]

public float swipeScrollScale = 4f;

#region GearVR swipe scroll

private Vector2 swipeStartPos;

private Vector2 unusedSwipe;

#endregion

// The raycaster that handles pointer interaction (e.g. with a mouse); gaze interaction always works regardless

// private OVRRaycaster _activeGraphicRaycaster;

[NonSerialized]

public OVRRaycaster activeGraphicRaycaster;

[Header("Dragging")]

[Tooltip("Minimum pointer movement in degrees to start dragging")]

public float angleDragThreshold = 1;

// The following region contains code exactly the same as the implementation

// of StandaloneInputModule. It is copied here rather than inheriting from StandaloneInputModule

// because most of StandaloneInputModule is private so it isn't possible to easily derive from.

// Future changes from Unity to StandaloneInputModule will make it possible for this class to

// derive from StandaloneInputModule instead of PointerInputModule.

//

// The following functions are not present in the following region since they have modified

// versions in the next region:

// Process

// ProcessMouseEvent

// UseMouse

#region StandaloneInputModule code

private float m_NextAction;

private Vector2 m_LastMousePosition;

private Vector2 m_MousePosition;

protected OVRInputModule()

{}

void Reset()

{

allowActivationOnMobileDevice = true;

}

[Obsolete("Mode is no longer needed on input module as it handles both mouse and keyboard simultaneously.", false)]

public enum InputMode

{

Mouse,

Buttons

}

[Obsolete("Mode is no longer needed on input module as it handles both mouse and keyboard simultaneously.", false)]

public InputMode inputMode

{

get { return InputMode.Mouse; }

}

[Header("Standalone Input Module")]

[SerializeField]

private string m_HorizontalAxis = "Horizontal";

/// <summary>

/// Name of the vertical axis for movement (if axis events are used).

/// </summary>

[SerializeField]

private string m_VerticalAxis = "Vertical";

/// <summary>

/// Name of the submit button.

/// </summary>

[SerializeField]

private string m_SubmitButton = "Submit";

/// <summary>

/// Name of the cancel button.

/// </summary>

[SerializeField]

private string m_CancelButton = "Cancel";

[SerializeField]

private float m_InputActionsPerSecond = 10;

[SerializeField]

private bool m_AllowActivationOnMobileDevice;

public bool allowActivationOnMobileDevice

{

get { return m_AllowActivationOnMobileDevice; }

set { m_AllowActivationOnMobileDevice = value; }

}

public float inputActionsPerSecond

{

get { return m_InputActionsPerSecond; }

set { m_InputActionsPerSecond = value; }

}

/// <summary>

/// Name of the horizontal axis for movement (if axis events are used).

/// </summary>

public string horizontalAxis

{

get { return m_HorizontalAxis; }

set { m_HorizontalAxis = value; }

}

/// <summary>

/// Name of the vertical axis for movement (if axis events are used).

/// </summary>

public string verticalAxis

{

get { return m_VerticalAxis; }

set { m_VerticalAxis = value; }

}

public string submitButton

{

get { return m_SubmitButton; }

set { m_SubmitButton = value; }

}

public string cancelButton

{

get { return m_CancelButton; }

set { m_CancelButton = value; }

}

public override void UpdateModule()

{

m_LastMousePosition = m_MousePosition;

m_MousePosition = Input.mousePosition;

}

public override bool IsModuleSupported()

{

// Check for mouse presence instead of whether touch is supported,

// as you can connect mouse to a tablet and in that case we'd want

// to use StandaloneInputModule for non-touch input events.

return m_AllowActivationOnMobileDevice || Input.mousePresent;

}

public override bool ShouldActivateModule()

{

if (!base.ShouldActivateModule())

return false;

var shouldActivate = Input.GetButtonDown(m_SubmitButton);

shouldActivate |= Input.GetButtonDown(m_CancelButton);

shouldActivate |= !Mathf.Approximately(Input.GetAxisRaw(m_HorizontalAxis), 0.0f);

shouldActivate |= !Mathf.Approximately(Input.GetAxisRaw(m_VerticalAxis), 0.0f);

shouldActivate |= (m_MousePosition - m_LastMousePosition).sqrMagnitude > 0.0f;

shouldActivate |= Input.GetMouseButtonDown(0);

return shouldActivate;

}

public override void ActivateModule()

{

base.ActivateModule();

m_MousePosition = Input.mousePosition;

m_LastMousePosition = Input.mousePosition;

var toSelect = eventSystem.currentSelectedGameObject;

if (toSelect == null)

toSelect = eventSystem.firstSelectedGameObject;

eventSystem.SetSelectedGameObject(toSelect, GetBaseEventData());

}

public override void DeactivateModule()

{

base.DeactivateModule();

ClearSelection();

}

/// <summary>

/// Process submit keys.

/// </summary>

private bool SendSubmitEventToSelectedObject()

{

if (eventSystem.currentSelectedGameObject == null)

return false;

var data = GetBaseEventData();

if (Input.GetButtonDown(m_SubmitButton))

ExecuteEvents.Execute(eventSystem.currentSelectedGameObject, data, ExecuteEvents.submitHandler);

if (Input.GetButtonDown(m_CancelButton))

ExecuteEvents.Execute(eventSystem.currentSelectedGameObject, data, ExecuteEvents.cancelHandler);

return data.used;

}

private bool AllowMoveEventProcessing(float time)

{

bool allow = Input.GetButtonDown(m_HorizontalAxis);

allow |= Input.GetButtonDown(m_VerticalAxis);

allow |= (time > m_NextAction);

return allow;

}

private Vector2 GetRawMoveVector()

{

Vector2 move = Vector2.zero;

move.x = Input.GetAxisRaw(m_HorizontalAxis);

move.y = Input.GetAxisRaw(m_VerticalAxis);

if (Input.GetButtonDown(m_HorizontalAxis))

{

if (move.x < 0)

move.x = -1f;

if (move.x > 0)

move.x = 1f;

}

if (Input.GetButtonDown(m_VerticalAxis))

{

if (move.y < 0)

move.y = -1f;

if (move.y > 0)

move.y = 1f;

}

return move;

}

/// <summary>

/// Process keyboard events.

/// </summary>

private bool SendMoveEventToSelectedObject()

{

float time = Time.unscaledTime;

if (!AllowMoveEventProcessing(time))

return false;

Vector2 movement = GetRawMoveVector();

// Debug.Log(m_ProcessingEvent.rawType + " axis:" + m_AllowAxisEvents + " value:" + "(" + x + "," + y + ")");

var axisEventData = GetAxisEventData(movement.x, movement.y, 0.6f);

if (!Mathf.Approximately(axisEventData.moveVector.x, 0f)

|| !Mathf.Approximately(axisEventData.moveVector.y, 0f))

{

ExecuteEvents.Execute(eventSystem.currentSelectedGameObject, axisEventData, ExecuteEvents.moveHandler);

}

m_NextAction = time + 1f / m_InputActionsPerSecond;

return axisEventData.used;

}

private bool SendUpdateEventToSelectedObject()

{

if (eventSystem.currentSelectedGameObject == null)

return false;

var data = GetBaseEventData();

ExecuteEvents.Execute(eventSystem.currentSelectedGameObject, data, ExecuteEvents.updateSelectedHandler);

return data.used;

}

/// <summary>

/// Process the current mouse press.

/// </summary>

private void ProcessMousePress(MouseButtonEventData data)

{

var pointerEvent = data.buttonData;

var currentOverGo = pointerEvent.pointerCurrentRaycast.gameObject;

// PointerDown notification

if (data.PressedThisFrame())

{

pointerEvent.eligibleForClick = true;

pointerEvent.delta = Vector2.zero;

pointerEvent.dragging = false;

pointerEvent.useDragThreshold = true;

pointerEvent.pressPosition = pointerEvent.position;

pointerEvent.pointerPressRaycast = pointerEvent.pointerCurrentRaycast;

DeselectIfSelectionChanged(currentOverGo, pointerEvent);

// search for the control that will receive the press

// if we can't find a press handler set the press

// handler to be what would receive a click.

var newPressed = ExecuteEvents.ExecuteHierarchy(currentOverGo, pointerEvent, ExecuteEvents.pointerDownHandler);

// didn't find a press handler... search for a click handler

if (newPressed == null)

newPressed = ExecuteEvents.GetEventHandler<IPointerClickHandler>(currentOverGo);

// Debug.Log("Pressed: " + newPressed);

float time = Time.unscaledTime;

if (newPressed == pointerEvent.lastPress)

{

var diffTime = time - pointerEvent.clickTime;

if (diffTime < 0.3f)

++pointerEvent.clickCount;

else

pointerEvent.clickCount = 1;

pointerEvent.clickTime = time;

}

else

{

pointerEvent.clickCount = 1;

}

pointerEvent.pointerPress = newPressed;

pointerEvent.rawPointerPress = currentOverGo;

pointerEvent.clickTime = time;

// Save the drag handler as well

pointerEvent.pointerDrag = ExecuteEvents.GetEventHandler<IDragHandler>(currentOverGo);

if (pointerEvent.pointerDrag != null)

ExecuteEvents.Execute(pointerEvent.pointerDrag, pointerEvent, ExecuteEvents.initializePotentialDrag);

}

// PointerUp notification

if (data.ReleasedThisFrame())

{

// Debug.Log("Executing pressup on: " + pointer.pointerPress);

ExecuteEvents.Execute(pointerEvent.pointerPress, pointerEvent, ExecuteEvents.pointerUpHandler);

// Debug.Log("KeyCode: " + pointer.eventData.keyCode);

// see if we mouse up on the same element that we clicked on...

var pointerUpHandler = ExecuteEvents.GetEventHandler<IPointerClickHandler>(currentOverGo);

// PointerClick and Drop events

if (pointerEvent.pointerPress == pointerUpHandler && pointerEvent.eligibleForClick)

{

ExecuteEvents.Execute(pointerEvent.pointerPress, pointerEvent, ExecuteEvents.pointerClickHandler);

}

else if (pointerEvent.pointerDrag != null)

{

ExecuteEvents.ExecuteHierarchy(currentOverGo, pointerEvent, ExecuteEvents.dropHandler);

}

pointerEvent.eligibleForClick = false;

pointerEvent.pointerPress = null;

pointerEvent.rawPointerPress = null;

if (pointerEvent.pointerDrag != null && pointerEvent.dragging)

ExecuteEvents.Execute(pointerEvent.pointerDrag, pointerEvent, ExecuteEvents.endDragHandler);

pointerEvent.dragging = false;

pointerEvent.pointerDrag = null;

// redo pointer enter / exit to refresh state

// so that if we moused over something that ignored it before

// due to having pressed on something else

// it now gets it.

if (currentOverGo != pointerEvent.pointerEnter)

{

HandlePointerExitAndEnter(pointerEvent, null);

HandlePointerExitAndEnter(pointerEvent, currentOverGo);

}

}

}

        #endregion

        #region Modified StandaloneInputModule methods

        /// <summary>
        /// Process all mouse events. This is the same as the StandaloneInputModule version except that
        /// it takes MouseState as a parameter, allowing it to be used for both gaze and mouse
        /// pointers.
        /// </summary>
        private void ProcessMouseEvent(MouseState mouseData)
        {
            var pressed = mouseData.AnyPressesThisFrame();
            var released = mouseData.AnyReleasesThisFrame();

            var leftButtonData = mouseData.GetButtonState(PointerEventData.InputButton.Left).eventData;

            if (!UseMouse(pressed, released, leftButtonData.buttonData))
                return;

            // Process the first mouse button fully
            ProcessMousePress(leftButtonData);
            ProcessMove(leftButtonData.buttonData);
            ProcessDrag(leftButtonData.buttonData);

            // Now process right / middle clicks
            ProcessMousePress(mouseData.GetButtonState(PointerEventData.InputButton.Right).eventData);
            ProcessDrag(mouseData.GetButtonState(PointerEventData.InputButton.Right).eventData.buttonData);
            ProcessMousePress(mouseData.GetButtonState(PointerEventData.InputButton.Middle).eventData);
            ProcessDrag(mouseData.GetButtonState(PointerEventData.InputButton.Middle).eventData.buttonData);

            if (!Mathf.Approximately(leftButtonData.buttonData.scrollDelta.sqrMagnitude, 0.0f))
            {
                var scrollHandler = ExecuteEvents.GetEventHandler<IScrollHandler>(leftButtonData.buttonData.pointerCurrentRaycast.gameObject);
                ExecuteEvents.ExecuteHierarchy(scrollHandler, leftButtonData.buttonData, ExecuteEvents.scrollHandler);
            }
        }

        /// <summary>
        /// Process this InputModule. Same as the StandaloneInputModule version, except that it calls
        /// ProcessMouseEvent twice: once for gaze pointers and once for canvas (mouse) pointers.
        /// The canvas pointer pass is skipped on Android builds.
        /// </summary>
        public override void Process()
        {
            bool usedEvent = SendUpdateEventToSelectedObject();

            if (eventSystem.sendNavigationEvents)
            {
                if (!usedEvent)
                    usedEvent |= SendMoveEventToSelectedObject();

                if (!usedEvent)
                    SendSubmitEventToSelectedObject();
            }

            ProcessMouseEvent(GetGazePointerData());
#if !UNITY_ANDROID
            ProcessMouseEvent(GetCanvasPointerData());
#endif
        }

        /// <summary>
        /// Decide if mouse events need to be processed this frame. Same as StandaloneInputModule except
        /// that the IsPointerMoving method from this class is used, instead of the method on PointerEventData.
        /// </summary>
        private static bool UseMouse(bool pressed, bool released, PointerEventData pointerData)
        {
            return pressed || released || IsPointerMoving(pointerData) || pointerData.IsScrolling();
        }

        #endregion

        /// <summary>
        /// Convenience function for cloning OVRRayPointerEventData, including its world space ray.
        /// </summary>
        /// <param name="from">Copy this value</param>
        /// <param name="to">to this object</param>
        protected void CopyFromTo(OVRRayPointerEventData @from, OVRRayPointerEventData @to)
        {
            @to.position = @from.position;
            @to.delta = @from.delta;
            @to.scrollDelta = @from.scrollDelta;
            @to.pointerCurrentRaycast = @from.pointerCurrentRaycast;
            @to.pointerEnter = @from.pointerEnter;
            @to.worldSpaceRay = @from.worldSpaceRay;
        }

        /// <summary>
        /// Convenience function for cloning PointerEventData.
        /// </summary>
        /// <param name="from">Copy this value</param>
        /// <param name="to">to this object</param>
        protected void CopyFromTo(PointerEventData @from, PointerEventData @to)
        {
            @to.position = @from.position;
            @to.delta = @from.delta;
            @to.scrollDelta = @from.scrollDelta;
            @to.pointerCurrentRaycast = @from.pointerCurrentRaycast;
            @to.pointerEnter = @from.pointerEnter;
        }

        // In the following region we extend the PointerEventData system implemented in PointerInputModule.
        // We define an additional dictionary for ray-based (e.g. gaze) pointers. Mouse pointers still use
        // the dictionary in PointerInputModule.
        #region PointerEventData pool

        // Pool for OVRRayPointerEventData for ray based pointers
        protected Dictionary<int, OVRRayPointerEventData> m_VRRayPointerData = new Dictionary<int, OVRRayPointerEventData>();

        protected bool GetPointerData(int id, out OVRRayPointerEventData data, bool create)
        {
            if (!m_VRRayPointerData.TryGetValue(id, out data) && create)
            {
                data = new OVRRayPointerEventData(eventSystem)
                {
                    pointerId = id,
                };

                m_VRRayPointerData.Add(id, data);
                return true;
            }
            return false;
        }

        /// <summary>
        /// Clear pointer state for both types of pointer.
        /// </summary>
        protected new void ClearSelection()
        {
            var baseEventData = GetBaseEventData();

            foreach (var pointer in m_PointerData.Values)
            {
                // clear all selection
                HandlePointerExitAndEnter(pointer, null);
            }
            foreach (var pointer in m_VRRayPointerData.Values)
            {
                // clear all selection
                HandlePointerExitAndEnter(pointer, null);
            }

            m_PointerData.Clear();
            m_VRRayPointerData.Clear();
            eventSystem.SetSelectedGameObject(null, baseEventData);
        }

        #endregion

        /// <summary>
        /// For a RectTransform, calculate its normal in world space.
        /// </summary>
        static Vector3 GetRectTransformNormal(RectTransform rectTransform)
        {
            // GetWorldCorners fills the array clockwise starting at the bottom left:
            // [0] bottom left, [1] top left, [2] top right, [3] bottom right.
            Vector3[] corners = new Vector3[4];
            rectTransform.GetWorldCorners(corners);
            Vector3 bottomEdge = corners[3] - corners[0];
            Vector3 leftEdge = corners[1] - corners[0];
            return Vector3.Cross(leftEdge, bottomEdge).normalized;
        }

        private readonly MouseState m_MouseState = new MouseState();

        // Overridden so that we can process the two types of pointer separately.

        // The following two functions are equivalent to PointerInputModule.GetMousePointerEventData but are
        // customized to get data for ray pointers and canvas mouse pointers.

        /// <summary>
        /// State for a pointer controlled by a world space ray, e.g. a gaze pointer.
        /// </summary>
        /// <returns></returns>
        protected MouseState GetGazePointerData()
        {
            // Get the OVRRayPointerEventData reference
            OVRRayPointerEventData leftData;
            GetPointerData(kMouseLeftId, out leftData, true);
            leftData.Reset();

            // Now set the world space ray. This ray is what the user uses to point at UI elements
            leftData.worldSpaceRay = new Ray(rayTransform.position, rayTransform.forward);
            leftData.scrollDelta = GetExtraScrollDelta();

            // Populate some default values
            leftData.button = PointerEventData.InputButton.Left;
            leftData.useDragThreshold = true;

            // Perform raycast to find intersections with world
            eventSystem.RaycastAll(leftData, m_RaycastResultCache);
            var raycast = FindFirstRaycast(m_RaycastResultCache);
            leftData.pointerCurrentRaycast = raycast;
            m_RaycastResultCache.Clear();

            // Handle intersections reported by an OVRRaycaster (UI canvases)
            OVRRaycaster ovrRaycaster = raycast.module as OVRRaycaster;
            if (ovrRaycaster)
            {
                // The Unity UI system expects event data to have a screen position,
                // so even though this raycast came from a world space ray we must get a screen
                // space position for the camera attached to this raycaster for compatibility
                leftData.position = ovrRaycaster.GetScreenPosition(raycast);

                // Find the world position and normal of the Graphic the ray intersected
                RectTransform graphicRect = raycast.gameObject.GetComponent<RectTransform>();
                if (graphicRect != null)
                {
                    // Set our gaze indicator with this world position and normal
                    Vector3 worldPos = raycast.worldPosition;
                    Vector3 normal = GetRectTransformNormal(graphicRect);
                    OVRGazePointer.instance.SetPosition(worldPos, normal);
                    // Make sure it's being shown
                    OVRGazePointer.instance.RequestShow();
                }
            }

            // Handle intersections reported by an OVRPhysicsRaycaster (3D colliders)
            OVRPhysicsRaycaster physicsRaycaster = raycast.module as OVRPhysicsRaycaster;
            if (physicsRaycaster)
            {
                leftData.position = physicsRaycaster.GetScreenPos(raycast.worldPosition);
                OVRGazePointer.instance.RequestShow();
                OVRGazePointer.instance.SetPosition(raycast.worldPosition, raycast.worldNormal);
            }

            // Stick default data values in the right and middle slots for compatibility:
            // copy the appropriate data into the right and middle slots
            OVRRayPointerEventData rightData;
            GetPointerData(kMouseRightId, out rightData, true);
            CopyFromTo(leftData, rightData);
            rightData.button = PointerEventData.InputButton.Right;

            OVRRayPointerEventData middleData;
            GetPointerData(kMouseMiddleId, out middleData, true);
            CopyFromTo(leftData, middleData);
            middleData.button = PointerEventData.InputButton.Middle;

            m_MouseState.SetButtonState(PointerEventData.InputButton.Left, GetGazeButtonState(), leftData);
            m_MouseState.SetButtonState(PointerEventData.InputButton.Right, PointerEventData.FramePressState.NotChanged, rightData);
            m_MouseState.SetButtonState(PointerEventData.InputButton.Middle, PointerEventData.FramePressState.NotChanged, middleData);
            return m_MouseState;
        }

        /// <summary>
        /// Get the state of a pointer that moves in world space across the surface of a world space canvas.
        /// </summary>
        /// <returns></returns>
        protected MouseState GetCanvasPointerData()
        {
            // Get the PointerEventData reference (canvas pointers use the PointerInputModule pool)
            PointerEventData leftData;
            GetPointerData(kMouseLeftId, out leftData, true);
            leftData.Reset();

            // Set up default values here. Set position to zero because we don't actually know the pointer
            // positions. Each canvas knows the position of its canvas pointer.
            leftData.position = Vector2.zero;
            leftData.scrollDelta = Input.mouseScrollDelta;
            leftData.button = PointerEventData.InputButton.Left;

            if (activeGraphicRaycaster)
            {
                // Let the active raycaster find intersections on its canvas
                activeGraphicRaycaster.RaycastPointer(leftData, m_RaycastResultCache);
                var raycast = FindFirstRaycast(m_RaycastResultCache);
                leftData.pointerCurrentRaycast = raycast;
                m_RaycastResultCache.Clear();

                OVRRaycaster ovrRaycaster = raycast.module as OVRRaycaster;
                if (ovrRaycaster) // raycast may not actually contain a result
                {
                    // The Unity UI system expects event data to have a screen position,
                    // so even though this raycast came from a world space ray we must get a screen
                    // space position for the camera attached to this raycaster for compatibility
                    Vector2 position = ovrRaycaster.GetScreenPosition(raycast);

                    leftData.delta = position - leftData.position;
                    leftData.position = position;
                }
            }

            // Copy the appropriate data into the right and middle slots
            PointerEventData rightData;
            GetPointerData(kMouseRightId, out rightData, true);
            CopyFromTo(leftData, rightData);
            rightData.button = PointerEventData.InputButton.Right;

            PointerEventData middleData;
            GetPointerData(kMouseMiddleId, out middleData, true);
            CopyFromTo(leftData, middleData);
            middleData.button = PointerEventData.InputButton.Middle;

            m_MouseState.SetButtonState(PointerEventData.InputButton.Left, StateForMouseButton(0), leftData);
            m_MouseState.SetButtonState(PointerEventData.InputButton.Right, StateForMouseButton(1), rightData);
            m_MouseState.SetButtonState(PointerEventData.InputButton.Middle, StateForMouseButton(2), middleData);
            return m_MouseState;
        }

        /// <summary>
        /// New version of ShouldStartDrag, first implemented in PointerInputModule. This version differs in that
        /// for ray-based pointers it decides whether a drag should start based on the angular change
        /// the pointer has made so far, as seen from the camera. This also works when the world space ray is
        /// translated rather than rotated, since the beginning and end of the movement are both measured as
        /// angles from the same point.
        /// </summary>
        private bool ShouldStartDrag(PointerEventData pointerEvent)
        {
            if (!pointerEvent.useDragThreshold)
                return true;

            if (pointerEvent as OVRRayPointerEventData == null)
            {
                // Same as the original behaviour for canvas-based pointers
                return (pointerEvent.pressPosition - pointerEvent.position).sqrMagnitude >= eventSystem.pixelDragThreshold * eventSystem.pixelDragThreshold;
            }
            else
            {
                // When it's not a screen space pointer we have to look at the angle it moved rather than
                // the pixel distance. For gaze-based pointing, the screen-space distance moved is always near 0.
                Vector3 cameraPos = pointerEvent.pressEventCamera.transform.position;
                Vector3 pressDir = (pointerEvent.pointerPressRaycast.worldPosition - cameraPos).normalized;
                Vector3 currentDir = (pointerEvent.pointerCurrentRaycast.worldPosition - cameraPos).normalized;
                // Dot of two unit vectors is the cosine of the angle between them
                return Vector3.Dot(pressDir, currentDir) < Mathf.Cos(Mathf.Deg2Rad * angleDragThreshold);
            }
        }

        /// <summary>
        /// The purpose of this function is to allow us to switch between using the standard IsPointerMoving
        /// method for mouse-driven pointers, and always returning true for ray-based pointers.
        /// All real-world ray-based input devices are always moving, so for simplicity we just return true
        /// for them.
        ///
        /// If PointerEventData.IsPointerMoving were virtual we could just override it in
        /// OVRRayPointerEventData.
        /// </summary>
        /// <param name="pointerEvent"></param>
        /// <returns></returns>
        static bool IsPointerMoving(PointerEventData pointerEvent)
        {
            OVRRayPointerEventData rayPointerEventData = pointerEvent as OVRRayPointerEventData;
            if (rayPointerEventData != null)
                return true;
            else
                return pointerEvent.IsPointerMoving();
        }

        /// <summary>
        /// Exactly the same as the code from PointerInputModule, except that we call our own
        /// IsPointerMoving.
        ///
        /// This would also not be necessary if PointerEventData.IsPointerMoving were virtual.
        /// </summary>
        /// <param name="pointerEvent"></param>
        protected override void ProcessDrag(PointerEventData pointerEvent)
        {
            bool moving = IsPointerMoving(pointerEvent);
            if (moving && pointerEvent.pointerDrag != null
                && !pointerEvent.dragging
                && ShouldStartDrag(pointerEvent))
            {
                ExecuteEvents.Execute(pointerEvent.pointerDrag, pointerEvent, ExecuteEvents.beginDragHandler);
                pointerEvent.dragging = true;
            }

            // Drag notification
            if (pointerEvent.dragging && moving && pointerEvent.pointerDrag != null)
            {
                // Before doing drag we should cancel any pointer down state
                // and clear selection!
                if (pointerEvent.pointerPress != pointerEvent.pointerDrag)
                {
                    ExecuteEvents.Execute(pointerEvent.pointerPress, pointerEvent, ExecuteEvents.pointerUpHandler);

                    pointerEvent.eligibleForClick = false;
                    pointerEvent.pointerPress = null;
                    pointerEvent.rawPointerPress = null;
                }
                ExecuteEvents.Execute(pointerEvent.pointerDrag, pointerEvent, ExecuteEvents.dragHandler);
            }
        }

        /// <summary>
        /// Get the state of the button corresponding to the gaze pointer.
        /// </summary>
        /// <returns></returns>
        protected PointerEventData.FramePressState GetGazeButtonState()
        {
            var pressed = Input.GetKeyDown(gazeClickKey) || OVRInput.GetDown(joyPadClickButton);
            var released = Input.GetKeyUp(gazeClickKey) || OVRInput.GetUp(joyPadClickButton);

#if UNITY_ANDROID && !UNITY_EDITOR
            pressed |= Input.GetMouseButtonDown(0);
            released |= Input.GetMouseButtonUp(0);
#endif

            if (pressed && released)
                return PointerEventData.FramePressState.PressedAndReleased;
            if (pressed)
                return PointerEventData.FramePressState.Pressed;
            if (released)
                return PointerEventData.FramePressState.Released;
            return PointerEventData.FramePressState.NotChanged;
        }

        /// <summary>
        /// Get extra scroll delta from the gamepad.
        /// </summary>
        protected Vector2 GetExtraScrollDelta()
        {
            Vector2 scrollDelta = Vector2.zero;
            if (useLeftStickScroll)
            {
                Vector2 stick = OVRInput.Get(OVRInput.Axis2D.PrimaryThumbstick);
                float x = stick.x;
                float y = stick.y;
                // Ignore small stick deflections inside the dead zone
                if (Mathf.Abs(x) < leftStickDeadZone) x = 0;
                if (Mathf.Abs(y) < leftStickDeadZone) y = 0;
                scrollDelta = new Vector2(x, y);
            }
            return scrollDelta;
        }
    }

}
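The angular drag test in ShouldStartDrag can be tried outside Unity. The sketch below is a minimal, hedged reimplementation that assumes System.Numerics.Vector3 as a stand-in for UnityEngine.Vector3; the class name AngularDragThreshold, its method signature and the sample positions are hypothetical and not part of the OVR API. It mirrors the comparison the module makes: the dot product of two unit directions from the camera is the cosine of the angle between them, so "moved more than the threshold angle" becomes "dot < cos(threshold)".

```csharp
using System;
using System.Numerics;

// Hypothetical standalone sketch of the ray-pointer branch of ShouldStartDrag.
// System.Numerics.Vector3 replaces UnityEngine.Vector3 so it runs without Unity.
public static class AngularDragThreshold
{
    // True when the direction from cameraPos to currentHit differs from the
    // direction to pressHit by more than thresholdDegrees.
    public static bool ShouldStartDrag(Vector3 cameraPos, Vector3 pressHit, Vector3 currentHit, float thresholdDegrees)
    {
        Vector3 pressDir = Vector3.Normalize(pressHit - cameraPos);
        Vector3 currentDir = Vector3.Normalize(currentHit - cameraPos);

        // Cosine shrinks as the angle grows, so a larger angle gives a smaller dot product.
        double cosThreshold = Math.Cos(thresholdDegrees * Math.PI / 180.0);
        return Vector3.Dot(pressDir, currentDir) < cosThreshold;
    }

    public static void Main()
    {
        var camera = new Vector3(0f, 0f, 0f);
        var press = new Vector3(0f, 0f, 2f);      // hit point 2 m straight ahead
        var nearby = new Vector3(0.01f, 0f, 2f);  // about 0.29 degrees from the press direction
        var farther = new Vector3(0.1f, 0f, 2f);  // about 2.86 degrees from the press direction

        Console.WriteLine(ShouldStartDrag(camera, press, nearby, 1f));  // False
        Console.WriteLine(ShouldStartDrag(camera, press, farther, 1f)); // True
    }
}
```

Because only directions from the camera are compared, the same test works whether the hit point moved because the ray rotated or because it was translated, which is the property the ShouldStartDrag summary describes.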